Benign Overfitting

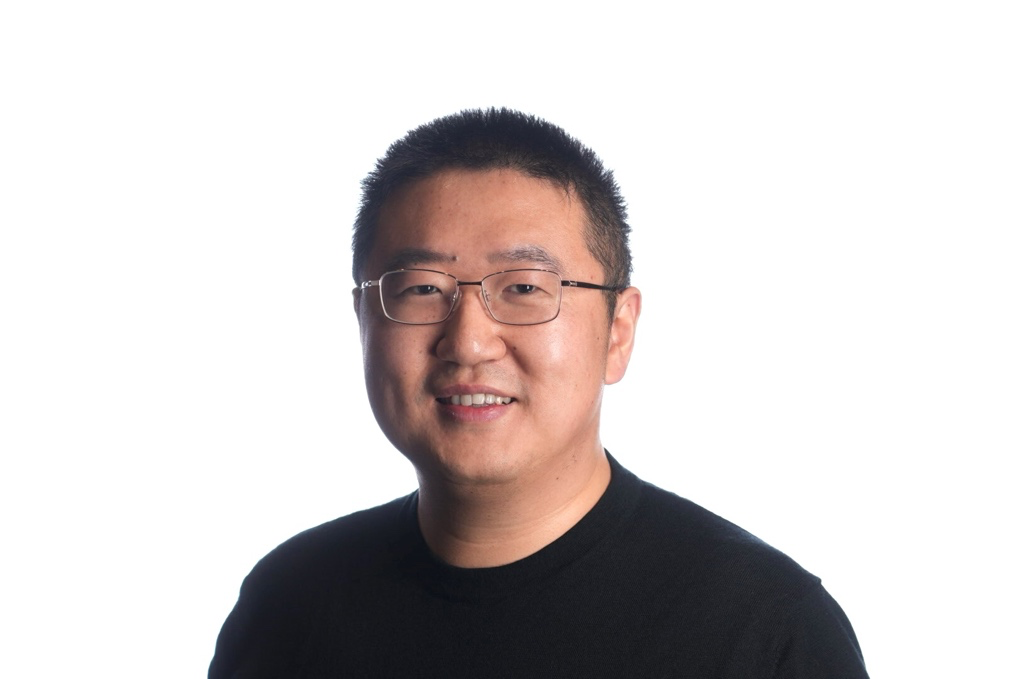

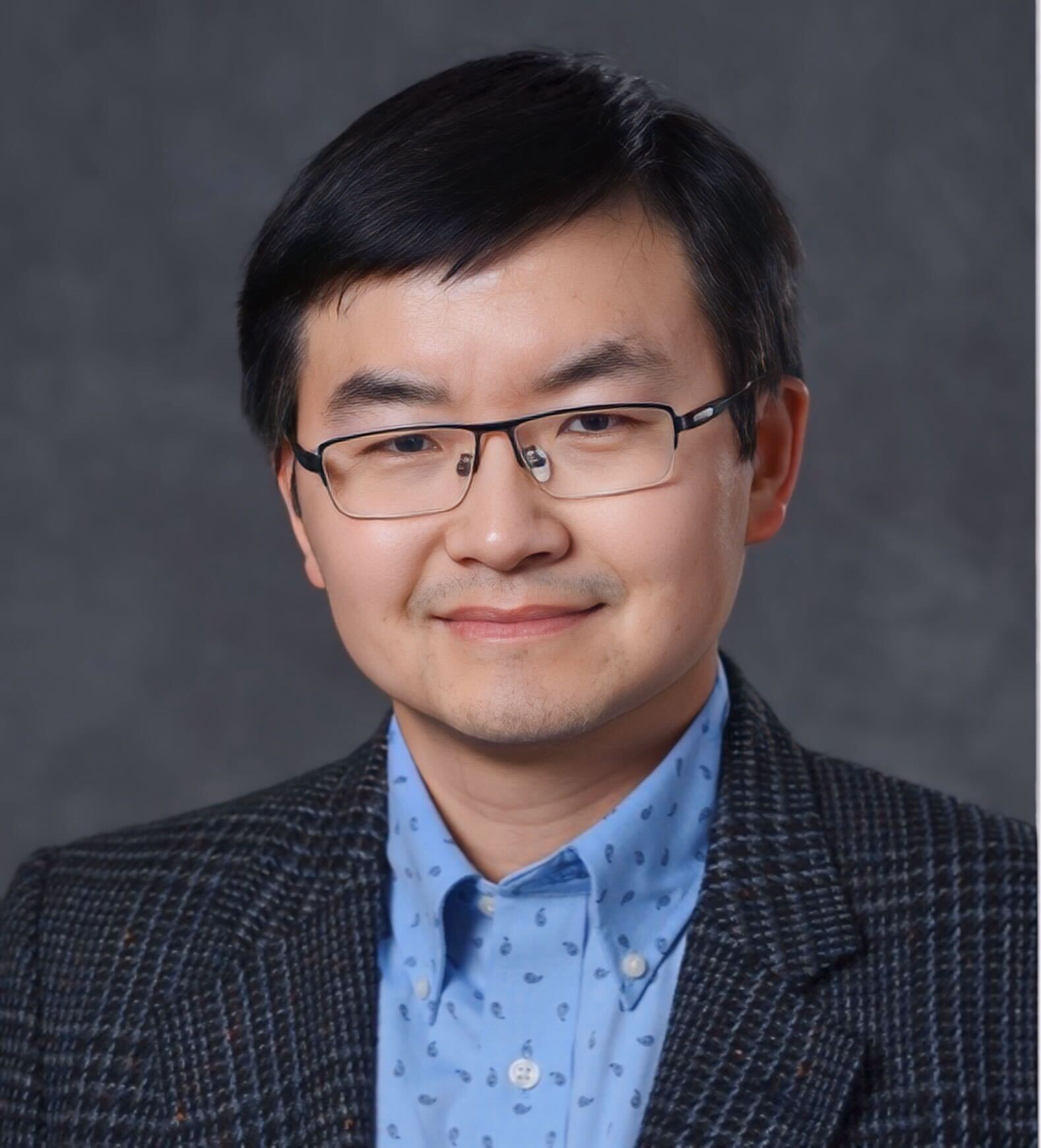

Peter Bartlett

Peter Bartlett is the Associate Director of the Simons Institute for the Theory of Computing and a professor in EECS and Statistics at the University of California at Berkeley. His research interests include machine learning and statistical learning theory, and he is the co-author, with Martin Anthony, of the book Neural Network Learning: Theoretical Foundations. He has been an Institute of Mathematical Statistics Medallion Lecturer, winner of the Malcolm McIntosh Prize for Physical Scientist of the Year, and an Australian Laureate Fellow, and he is a Fellow of the IMS, the ACM, and the Australian Academy of Science.

Classical theory that guides the design of nonparametric prediction methods like deep neural networks involves a tradeoff between the fit to the training data and the complexity of the prediction rule. Deep learning seems to operate outside the regime where these results are informative, since deep networks can perform well even with a perfect fit to noisy training data. We investigate this phenomenon of ‘benign overfitting’ in the simplest setting, that of linear prediction. We give a characterization of linear regression problems for which the minimum norm interpolating prediction rule has near-optimal prediction accuracy. The characterization is in terms of two notions of effective rank of the data covariance. It shows that massive overparameterization is essential: the number of directions in parameter space that are unimportant for prediction must significantly exceed the sample size. We discuss implications for deep networks and for robustness to adversarial examples.
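
The abstract does not spell out the two notions of effective rank. As a rough illustration, the sketch below assumes the definitions used in the related paper on benign overfitting in linear regression: for covariance eigenvalues λ_1 ≥ λ_2 ≥ …, r_k = (Σ_{i>k} λ_i) / λ_{k+1} and R_k = (Σ_{i>k} λ_i)² / Σ_{i>k} λ_i². The function name `effective_ranks` and the example spectrum are hypothetical, chosen only for illustration.

```python
def effective_ranks(eigenvalues, k):
    """Return (r_k, R_k) for a covariance spectrum.

    Assumed definitions (not stated in the abstract itself):
      r_k = (sum of eigenvalues beyond the k largest) / (the (k+1)-th largest)
      R_k = (same sum)^2 / (sum of squares of those eigenvalues)
    """
    lam = sorted(eigenvalues, reverse=True)  # lambda_1 >= lambda_2 >= ...
    tail = lam[k:]                           # lambda_{k+1}, lambda_{k+2}, ...
    if not tail or tail[0] <= 0:
        raise ValueError("need a positive eigenvalue beyond index k")
    tail_sum = sum(tail)
    tail_sum_sq = sum(x * x for x in tail)
    return tail_sum / tail[0], tail_sum ** 2 / tail_sum_sq


# Hypothetical spectrum: 4 important directions plus 64 low-variance ones.
spectrum = [1.0] * 4 + [0.25] * 64
r0, R0 = effective_ranks(spectrum, 0)  # effective ranks of the full spectrum
r4, R4 = effective_ranks(spectrum, 4)  # effective ranks of the low-variance tail
```

Under these assumed definitions, a flat tail of m equal eigenvalues has r_k = R_k = m, so both quantities count the number of low-variance directions. This is the sense in which the number of directions that are unimportant for prediction can be compared with the sample size.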