Uncertainty Estimation for Multi-view/Multimodal Data

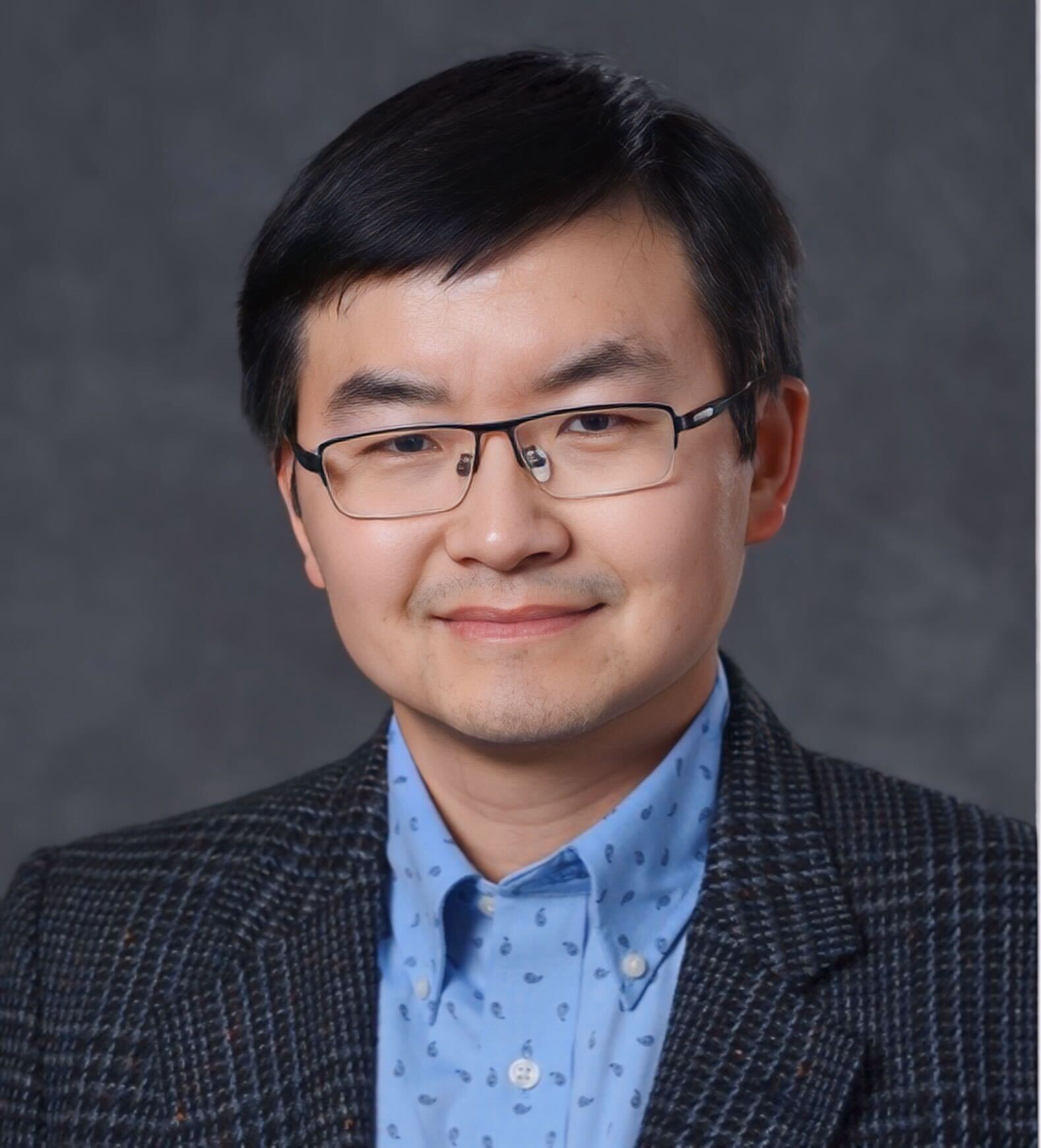

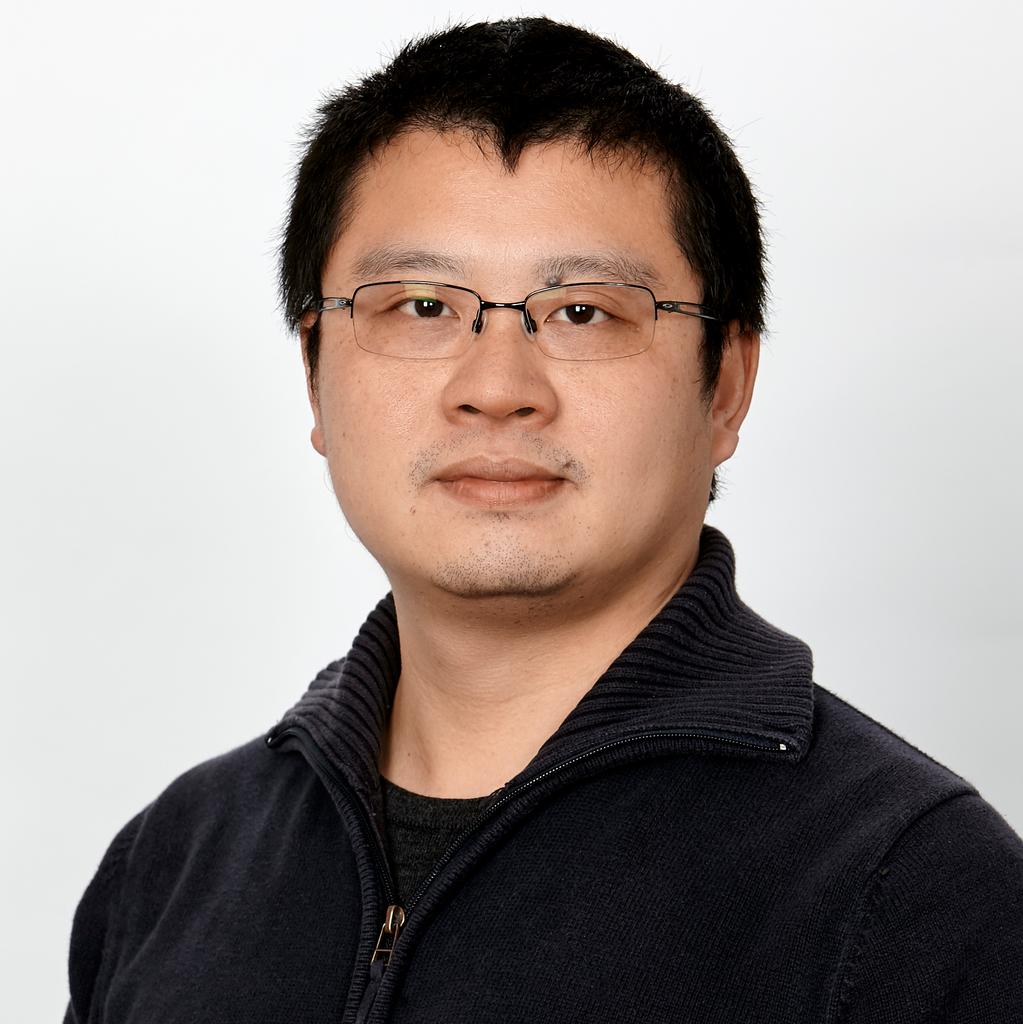

Dr Lan Du

Dr Lan Du is an Associate Professor in Data Science and AI at Monash University, Australia, and a Senior Member of the IEEE. His research lies at the intersection of machine/deep learning and natural language processing, with a particular focus on active learning, uncertainty estimation, and knowledge distillation. He is also interested in applying these methods in diverse domains, including public health, marketing, and science. He has published more than 100 high-quality research papers in top conferences and journals in machine learning, natural language processing, and data mining, such as NeurIPS, ICML, ACL, EMNLP, AAAI, TPAMI, IJCV, TMM, and TNNLS. He serves on the editorial boards of the Machine Learning journal, ACM Transactions on Probabilistic Machine Learning, and Big Data Research. He is also an area chair for the AAAI Conference on Artificial Intelligence and a program committee member or reviewer for the top-tier conferences in machine learning and natural language processing. As a chief investigator, he has secured three government grants: an NHMRC Ideas Grant, an ARC Discovery Project grant, and an MRFF grant, totalling AUD 3M in research funding.

Uncertainty estimation is essential for making neural networks trustworthy in real-world applications. Extensive research has been devoted to quantifying and reducing predictive uncertainty. However, most existing work is designed for unimodal data, and multi-view uncertainty estimation remains insufficiently investigated. We therefore propose two new multi-view classification frameworks for better uncertainty estimation and out-of-domain sample detection. The first associates each view with an uncertainty-aware classifier (a Gaussian process) and combines the posteriors of all views with a product-of-experts model; the second adapts Neural Processes to fuse information from different modalities. Experimental results on real-world datasets demonstrate that our frameworks are accurate, reliable, and well calibrated, and that they predominantly outperform the multi-view baselines tested in terms of expected calibration error, robustness to noise, and accuracy on both in-domain sample classification and out-of-domain sample detection.
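The abstract mentions two concrete ingredients: fusing per-view posteriors with a product of experts, and scoring calibration with expected calibration error (ECE). Below is a minimal, hypothetical sketch of both in plain Python; it is not the authors' implementation. It assumes each view's classifier (for example, a Gaussian process) already yields an independent Gaussian posterior N(mean, variance) over the same scalar latent value, and it uses the textbook Gaussian product of experts, whereas the published framework may weight or correct the experts differently. The function names `product_of_gaussian_experts` and `expected_calibration_error` are illustrative only.

```python
from typing import List, Sequence, Tuple


def product_of_gaussian_experts(
    means: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float]:
    """Fuse per-view Gaussian posteriors N(mu_v, var_v) over the same latent value.

    Illustrative sketch (assumes independent Gaussian experts): the product of
    Gaussian densities is, up to normalisation, another Gaussian whose precision
    is the sum of the experts' precisions and whose mean is the
    precision-weighted average of the experts' means.
    """
    if len(means) != len(variances) or len(means) == 0:
        raise ValueError("need one (mean, variance) pair per view")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]
    fused_var = 1.0 / sum(precisions)
    fused_mean = fused_var * sum(p * m for p, m in zip(precisions, means))
    return fused_mean, fused_var


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """ECE with equal-width confidence bins.

    Sums |accuracy - mean confidence| over bins, weighting each bin by the
    fraction of samples that fall into it.
    """
    n = len(confidences)
    if n == 0:
        return 0.0
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, c in enumerate(confidences):
        # Map a confidence in [0, 1] to one of n_bins equal-width bins;
        # c == 1.0 is placed in the last bin.
        idx = min(int(c * n_bins), n_bins - 1)
        bins[idx].append(i)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        acc = sum(correct[i] for i in members) / len(members)
        conf = sum(confidences[i] for i in members) / len(members)
        ece += len(members) / n * abs(acc - conf)
    return ece


if __name__ == "__main__":
    # A confident view (variance 0.5) and a noisy view (variance 2.0).
    mean, var = product_of_gaussian_experts([2.0, -1.0], [0.5, 2.0])
    print(mean, var)  # fused mean approx. 1.4, fused variance approx. 0.4
    print(expected_calibration_error([0.9, 0.9, 0.6, 0.6], [True, False, True, True]))
```

Because precisions add, the fused variance is never larger than the smallest per-view variance, so the confident view dominates the noisy one in the example above. The same property means a plain product of experts can become overconfident when views disagree, which is one reason a fusion scheme may need extra weighting.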