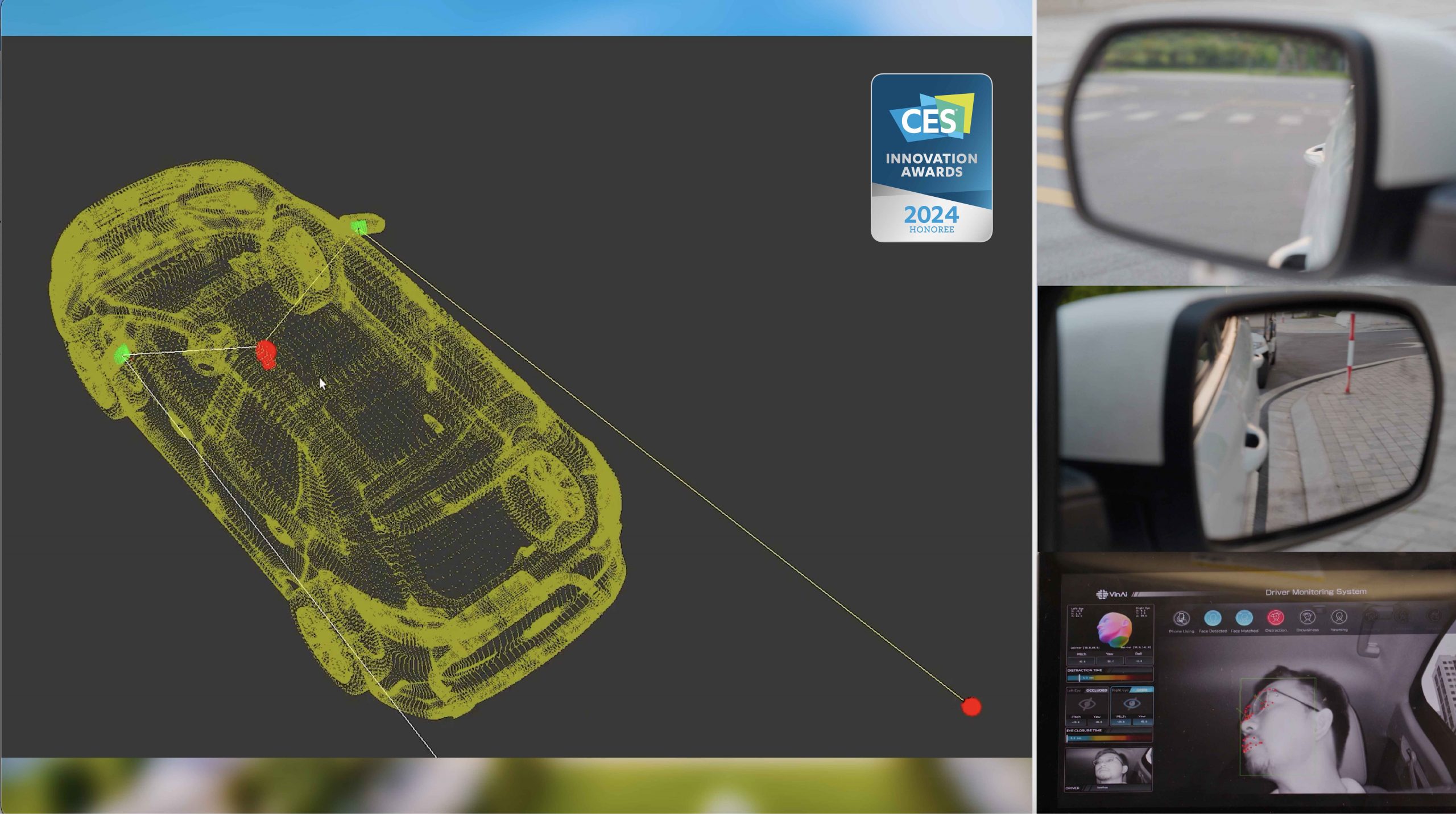

Bringing Intelligence to Every Drive

AI Talent

At VinAI, we believe that great things happen when passionate people come together. We're always looking for talented, motivated, and creative individuals who can contribute to our success. If you want a dynamic and rewarding career in the technology industry, you've come to the right place.

Join Our Team

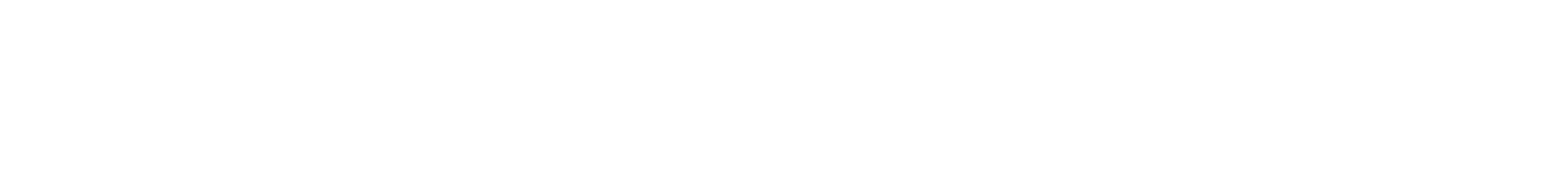

Recognized by Global Partners and Media