Adversarially robust stochastic multi-armed bandits

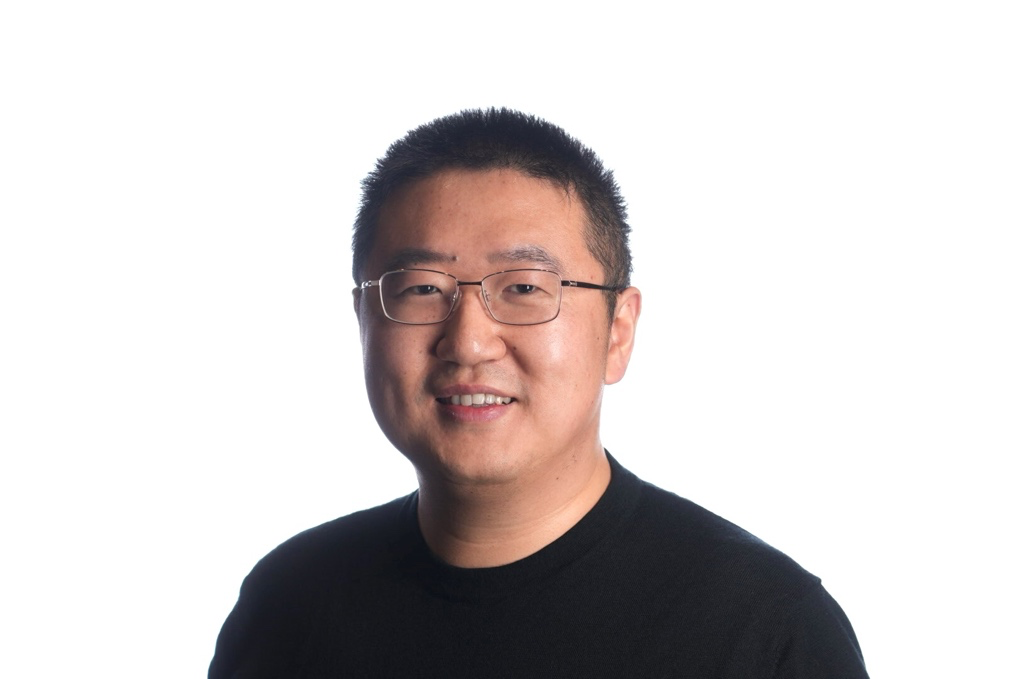

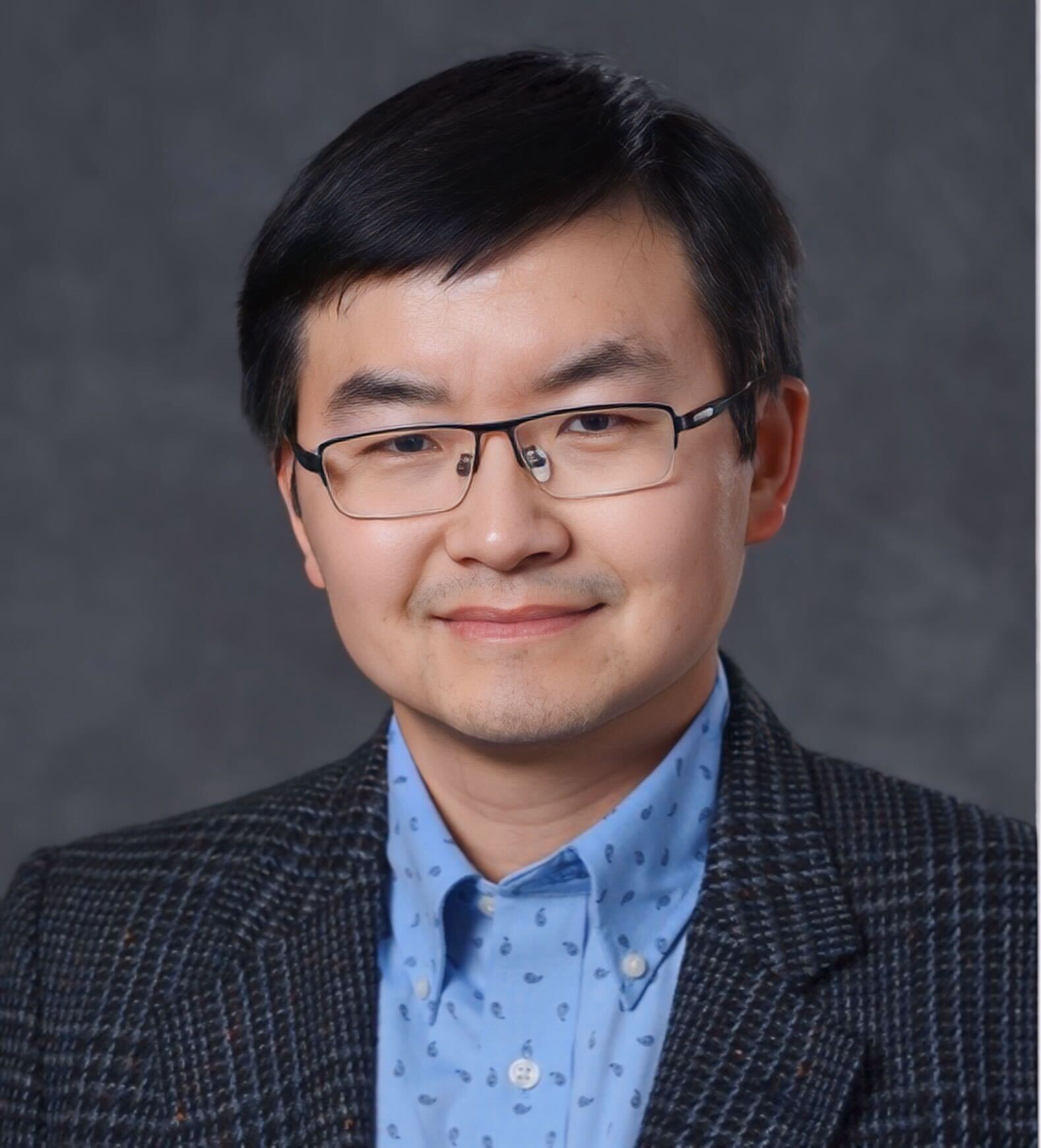

Julian Zimmert

Julian Zimmert received his Masters degree in Mathematics at the Humboldt University of Berlin and is now a final year PhD student at the University of Copenhagen working under supervision of Yevgeny Seldin. His main area of research is robust algorithms for ranges of environments, in particular algorithms for multi-armed bandits in adversarial and stochastic settings. Recently, he did an internship at DeepMind in the Foundations group of Csaba Szepesvari working on a connection between mirror descent and the information theoretic analysis of Thompson sampling.

Multi-armed bandits are a popular framework for optimal experimental design with applications in digital advertising and website optimisation. Traditionally, the bandit literature separates between two distinct forms of environments: The stochastic setting assumes that the data is generated by an i.i.d. process, which allows specialised algorithms to learn quickly. At the other extreme, the adversarial setting only assumes boundedness. This makes learning extremely robust, but comes at the cost of significantly slower convergence to the optimal solution. Real world applications are typically somewhere in between. While it might be reasonable to assume the data is close to i.i.d., the distribution might be influenced by hidden confounders or undergo unforeseen changes. Practically this means that stochastic bandit algorithms might fail even to approach a good solution. This poses a serious dilemma to the practitioners. Should one prioritise fast or robust learning? But why not both? This talk presents a recent breakthrough in practical all-purpose algorithms.