Annotation-Efficient Learning for Object Discovery and Detection

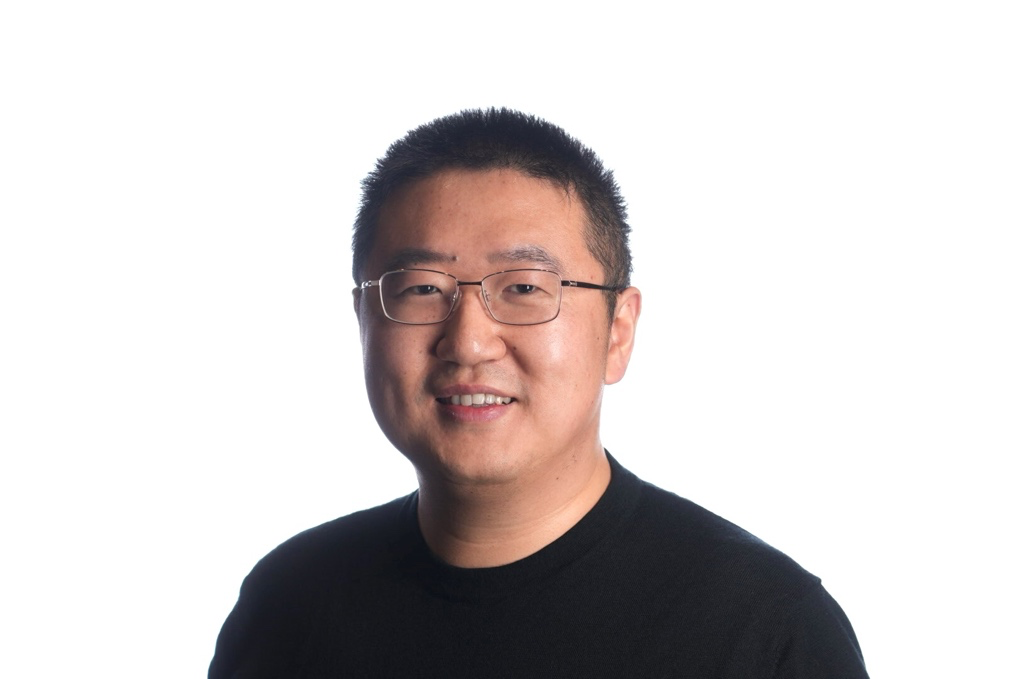

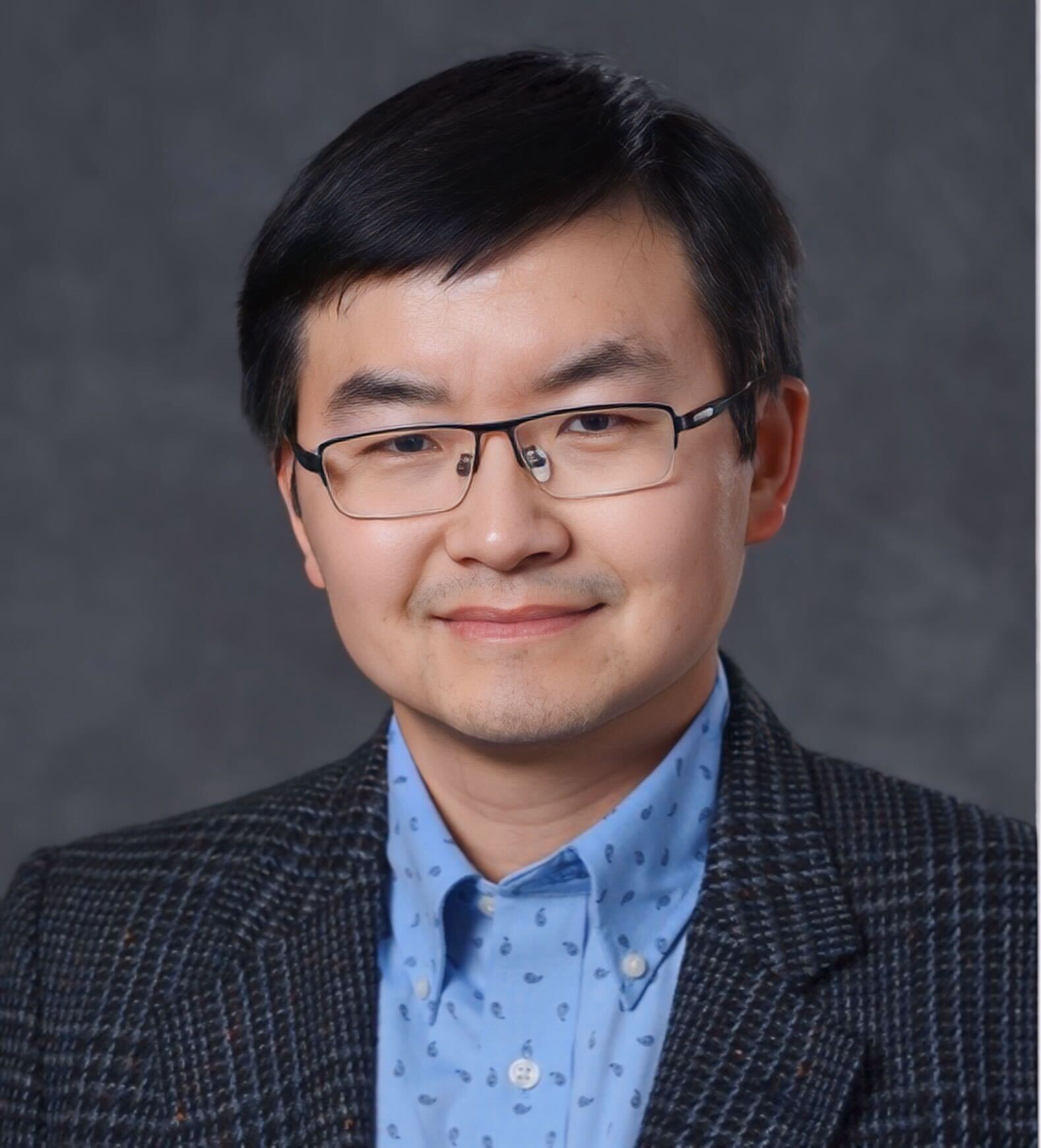

Huy V. Vo

Huy V. Vo is an AI Research Scientist at FAIR Labs, Meta. He obtained his PhD in Computer Science from Ecole Normale Supérieure in 2022. His thesis was prepared in INRIA’s WILLOW team and at valeo.ai, under the supervision of Jean Ponce (INRIA) and Patrick Pérez (valeo.ai). Before his PhD, he obtained the Engineer’s degree in Mathematics and Computer Science from Ecole Polytechnique in 2017 and the Master’s degree in Mathematics, Vision and Machine Learning from Ecole Normale Supérieure Paris-Saclay in 2018. His research interests revolve around learning problems with little or no supervision, including unsupervised object discovery, weakly supervised object detection, active learning and, more recently, self-supervised learning.

Object detectors are important components of intelligent systems such as autonomous vehicles and robots. They are typically obtained through fully supervised training, which requires large manually annotated datasets that are time-consuming and costly to build. In this talk, I will discuss several alternatives to fully supervised object detection that require less or even no manual annotation. I will first focus on the unsupervised object discovery problem: given an image collection without manual annotation, the goal is to identify pairs of images that contain similar objects and to localize these objects. I will present four approaches to this problem: two optimization-based methods (OSD, CVPR’19; rOSD, ECCV’20), a ranking method (LOD, NeurIPS’21), and a simple seed-growing approach that exploits features from self-supervised transformers (LOST, BMVC’21).

In the second part of the talk, I will discuss a practical scenario that combines weakly supervised and active learning to train an object detector, and introduce BiB (ECCV’22), an active learning strategy tailored to this scenario. I will show that our pipeline with BiB offers a better trade-off between annotation cost and effectiveness than both weakly and fully supervised object detection.
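To make the idea of seed growing over patch features concrete, here is a minimal NumPy sketch in the spirit of LOST. It is not the authors' code. It assumes patch features have already been extracted (in the talk's setting, from a self-supervised transformer; here a toy array stands in). The rules it uses are stated as assumptions: the seed is the patch with the fewest positive similarities to other patches, the mask grows from the `k` lowest-degree patches, and the box covers the 4-connected region around the seed. The names `seed_expand`, `seed_box` and `k`, and the choice to always keep the seed in the mask, are hypothetical.

```python
from collections import deque

import numpy as np


def seed_expand(features: np.ndarray, k: int = 3) -> tuple[int, np.ndarray]:
    """Hypothetical sketch: pick a seed patch and grow a foreground mask.

    features: (N, D) array, one feature vector per image patch.
    Returns (seed index, boolean mask of length N).
    """
    sim = features @ features.T                  # (N, N) patch similarities
    degree = (sim > 0).sum(axis=1)               # positive correlations per patch
    order = np.argsort(degree, kind="stable")    # lowest degree first
    seed = int(order[0])                         # assumed to lie on an object
    expansion = order[:k]                        # k lowest-degree patches
    # Keep patches whose summed similarity to the expansion set is positive.
    mask = sim[:, expansion].sum(axis=1) > 0
    mask[seed] = True                            # simplification: seed is always kept
    return seed, mask


def seed_box(features: np.ndarray, grid_hw: tuple[int, int], k: int = 3) -> tuple[int, int, int, int]:
    """Box (row_min, col_min, row_max, col_max), in patch units, around the
    4-connected component of the grown mask that contains the seed."""
    h, w = grid_hw
    seed, mask = seed_expand(features, k)
    grid = mask.reshape(h, w)
    start = divmod(seed, w)                      # (row, col) of the seed patch
    seen = {start}
    queue = deque([start])
    while queue:                                 # breadth-first flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and grid[nr, nc] and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    rows = [r for r, _ in seen]
    cols = [c for _, c in seen]
    return min(rows), min(cols), max(rows), max(cols)


# Toy 4x4 patch grid: background patches share one feature, and a 2x2
# "object" in the top-left corner has a feature anti-correlated with it.
feats = np.tile([1.0, 0.0], (16, 1))
feats[[0, 1, 4, 5]] = [-1.0, 1.0]
print(seed_box(feats, (4, 4)))  # (0, 0, 1, 1)
```

In this toy example the object patches are positively correlated with only 4 patches while background patches are correlated with 12, so the seed falls on the object and the grown region is exactly the top-left 2x2 block. Real patch features are far noisier, which is why the connected-component step around the seed matters.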