Efficient Model Development in the Era of Large Language Models

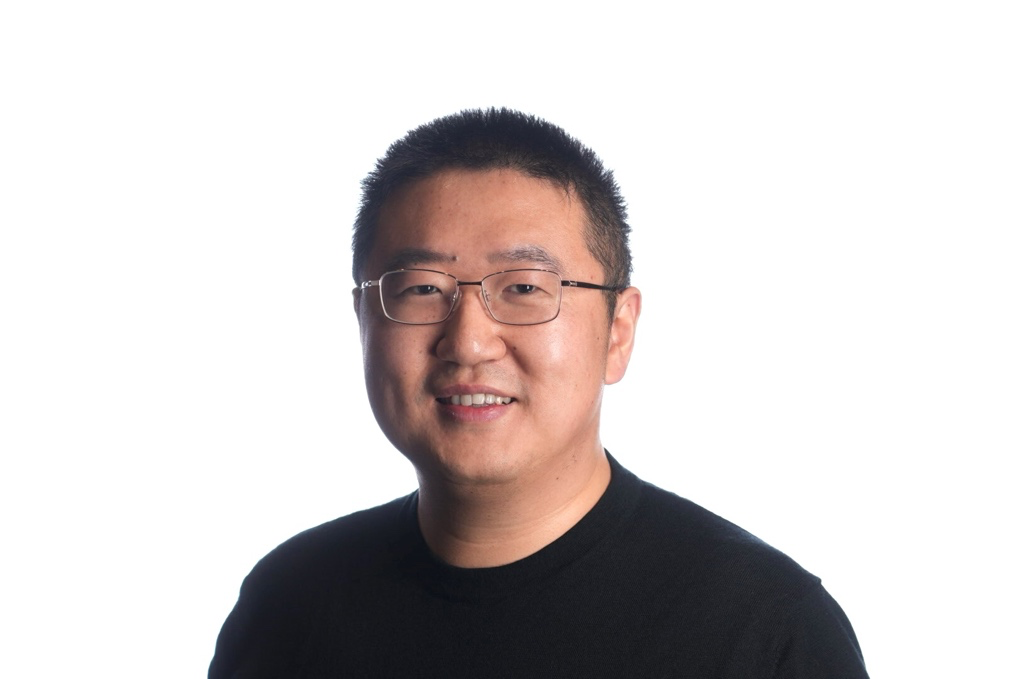

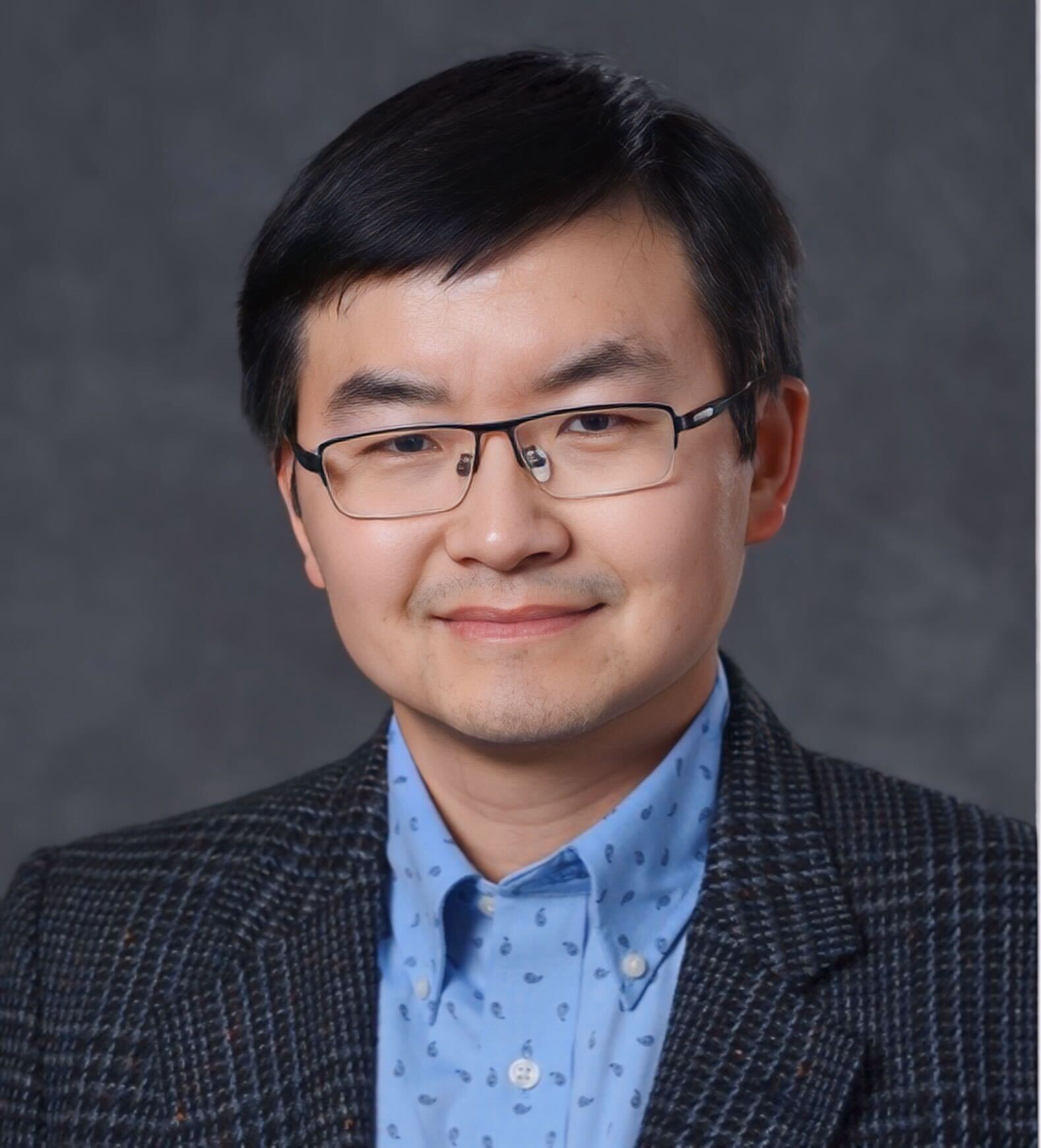

Dr. Tu Vu

Dr. Tu Vu is an Assistant Professor in the Department of Computer Science at Virginia Tech. Before joining Virginia Tech, he spent a year as a Research Scientist at Google DeepMind after receiving his PhD in Computer Science from the University of Massachusetts Amherst. Vu’s research aims to develop effective and efficient methods for advancing and democratizing artificial intelligence in the era of large language models. During his PhD, Vu worked as a student researcher at Google DeepMind, contributing to the development of models such as Gemini and Flan-T5. His work on parameter-efficient transfer learning through soft prompts was widely adopted within Google for tuning large language models.

The development of modern large language models often involves a post-training stage in which a pre-trained model is fine-tuned on a large multitask mixture to improve capabilities such as instruction following, reasoning, and coding. This raises two key research questions: (1) How can we efficiently determine the optimal dataset proportions for the mixture? (2) How can we integrate new capabilities into the model without sacrificing performance on existing ones? In this talk, I will present two of my recent research papers that shed light on these questions. First, I will discuss our work on developing Foundational Large Auto-rater Models (FLAMe), which introduces a novel tail-patch fine-tuning approach to evaluate the impact of each dataset on targeted distributions. This approach enables us to determine the optimal dataset proportions, yielding results competitive with example-proportional mixing while significantly reducing computational costs. Second, I will cover our latest work on large-scale model merging, which offers a promising solution for efficiently incorporating new capabilities: as model capability and capacity grow, merging preserves most of the performance on existing capabilities while significantly enhancing zero-shot generalization to novel tasks, often matching or exceeding the performance of multitask training. Taken as a whole, I hope these results will spur more fundamental research into the efficient development of large language models.