Faster saddle-point optimization for solving large-scale Markov decision processes

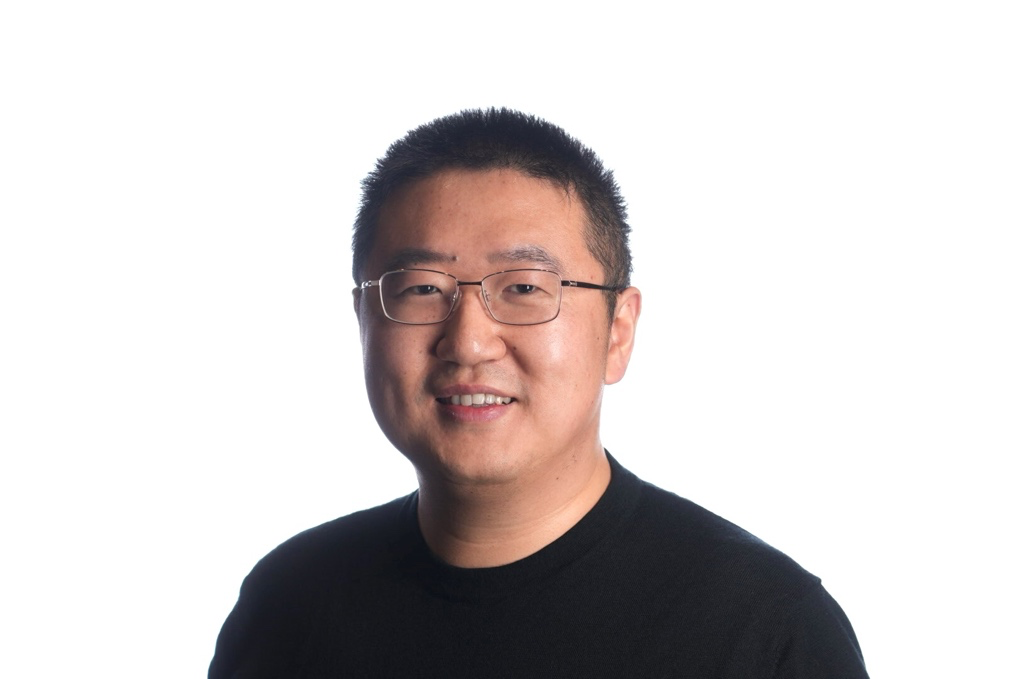

Joan Bas Serrano

Joan Bas Serrano is a PhD student and teaching assistant in the Artificial Intelligence and Machine Learning group at Universitat Pompeu Fabra (UPF). Under the supervision of Gergely Neu, he’s currently working on theoretical reinforcement learning. He is mainly interested in the development of reinforcement learning algorithms with a solid mathematical basis and strong theoretical guarantees. His current research is based on the linear programming formulation of MDPs and the saddle-point optimization theory.

He obtained his bachelor’s in Physics at Universitat Autònoma de Barcelona in 2016 and his M.Sc. at UPF in 2017, cursing the program “Master in Intelligent and Interactive Systems”. He did his master thesis titled “ A generative model of user activity in the Integrated Learning Design Environment“ under the advice of Vicenç Gomez and Davinia Hernandez-Leo.

We consider the problem of computing optimal policies in average-reward Markov decision processes. This classical problem can be formulated as a linear program directly amenable to saddle-point optimization methods, albeit with a number of variables that is linear in the number of states. To address this issue, recent work has considered a linearly relaxed version of the resulting saddle-point problem. Our work aims at achieving a better understanding of this relaxed optimization problem by characterizing the conditions necessary for convergence to the optimal policy, and designing an optimization algorithm enjoying fast convergence rates that are independent of the size of the state space. Notably, our characterization points out some potential issues with previous work.