Implicit Deep Learning

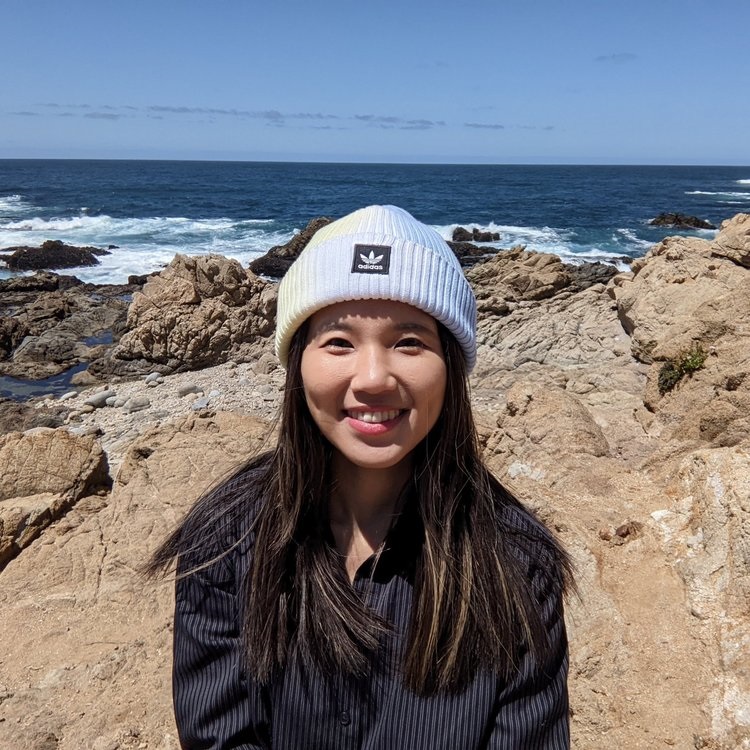

Alicia Tsai

Alicia is currently a third-year Ph.D. candidate in the Department of Electrical Engineering and Computer Sciences at UC Berkeley and a research assistant at the College of Engineering and Computer Science at VinUniversity. She is formally advised by Laurent El Ghaoui and is affiliated with the Berkeley Artificial Intelligence Research (BAIR) Lab and the NSF Artificial Intelligence Research Institute for Advances in Optimization (AI4OPT). Her research interests lie broadly in optimization, natural language processing, and machine learning.

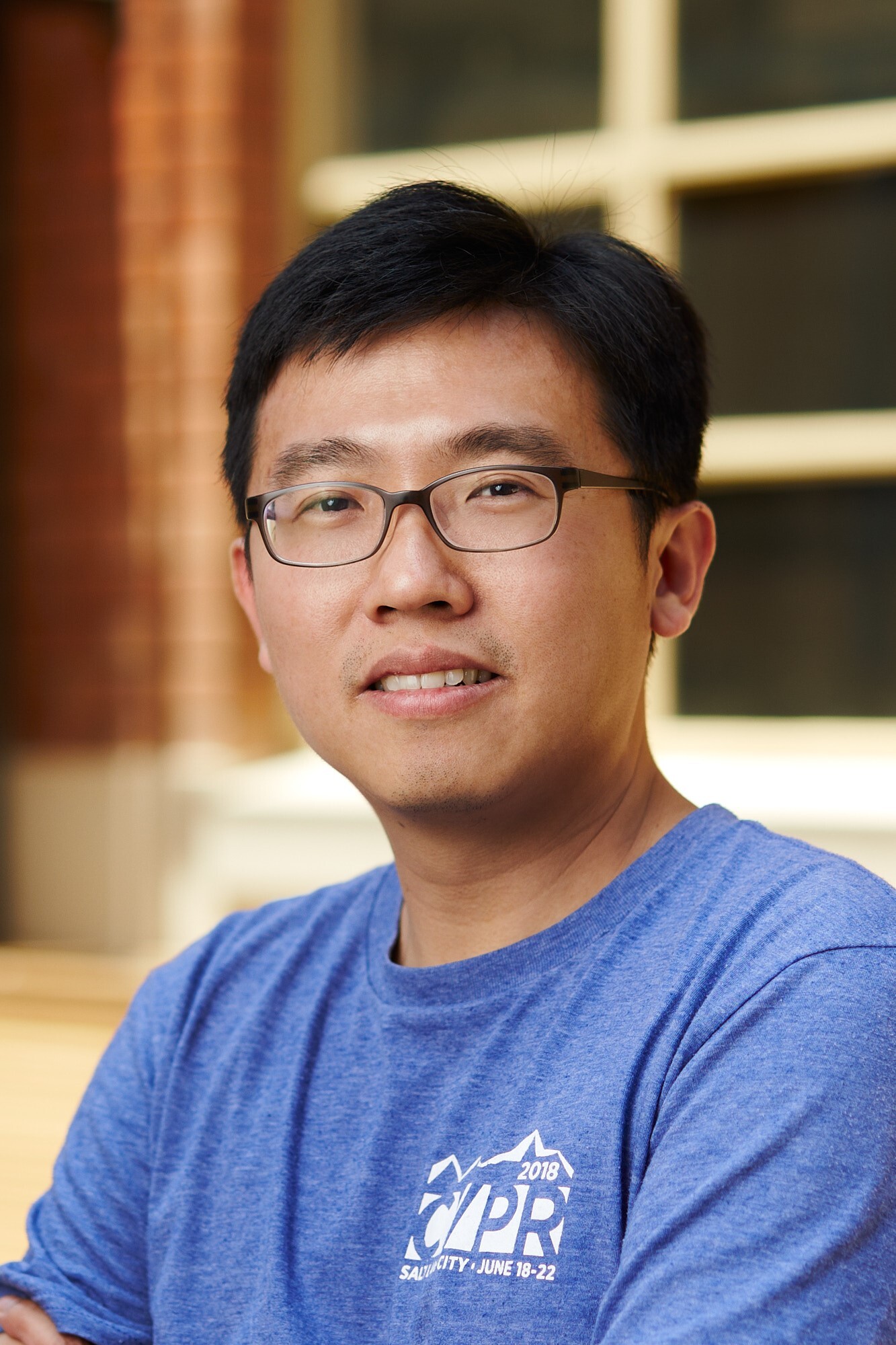

Recently, prediction rules based on so-called implicit models have emerged as a new, high-potential paradigm in deep learning. These models define the prediction through an “equilibrium” equation rather than through a recurrence over multiple layers. Even very complex deep learning models today are built on a “feedforward” structure without loops, so the popular term “neural” is not fully warranted for them, since the brain itself contains loops. Allowing loops may be key to describing complex, higher-level reasoning, which has so far eluded deep learning. However, loops raise the fundamental issue of well-posedness: the corresponding equilibrium equation may have no solution, or several. In this talk, I will review some aspects of implicit models, starting from a unifying “state-space” representation that greatly simplifies notation. I will also introduce various training problems for implicit models, including one amenable to convex optimization, and discuss their connections to topics such as architecture optimization, model compression, and robustness. In addition, I will show the potential of implicit models through experimental results on problems such as parameter reduction, feature elimination, and mathematical reasoning tasks.
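The abstract does not spell out the equilibrium equation. One common way to write it in the implicit-models literature (an assumption here, not taken from the talk itself) is the state-space form `ŷ = Cx + Du`, where the hidden state `x` solves `x = φ(Ax + Bu)` for an input `u` and a componentwise activation `φ` such as ReLU. The minimal sketch below solves that equation by fixed-point iteration. As an assumed sufficient condition for well-posedness, it rescales the rows of `A` so that its infinity norm (maximum absolute row sum) stays below 1; because ReLU is 1-Lipschitz in each coordinate, the iteration is then a contraction with a unique fixed point. The helper names (`rescale_rows`, `implicit_predict`) and the matrix sizes are hypothetical and chosen only for illustration.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    """Componentwise ReLU activation (1-Lipschitz in each coordinate)."""
    return np.maximum(z, 0.0)


def rescale_rows(A: np.ndarray, kappa: float = 0.95) -> np.ndarray:
    """Hypothetical helper: shrink rows of A so every absolute row sum is <= kappa < 1.

    This enforces ||A||_inf <= kappa, an assumed sufficient condition under which
    x = relu(A x + B u) has a unique solution. It is a simple rescaling, not an
    exact projection onto the infinity-norm ball.
    """
    row_sums = np.abs(A).sum(axis=1, keepdims=True)
    scale = np.minimum(1.0, kappa / np.maximum(row_sums, 1e-12))
    return A * scale


def implicit_predict(A, B, C, D, u, tol=1e-10, max_iter=1000):
    """Solve x = relu(A x + B u) by fixed-point iteration, then return (C x + D u, x)."""
    x = np.zeros(A.shape[0])
    for _ in range(max_iter):
        x_next = relu(A @ x + B @ u)
        converged = np.max(np.abs(x_next - x)) < tol
        x = x_next
        if converged:
            break
    else:
        # Only reached if the loop never hit `break`.
        raise RuntimeError("fixed-point iteration did not converge")
    return C @ x + D @ u, x


# Usage with arbitrary random parameters (sizes are illustrative only).
rng = np.random.default_rng(0)
n, p, q = 8, 3, 2  # hidden-state, input, and output dimensions
A = rescale_rows(rng.standard_normal((n, n)), kappa=0.9)
B = rng.standard_normal((n, p))
C = rng.standard_normal((q, n))
D = rng.standard_normal((q, p))
u = rng.standard_normal(p)

y, x = implicit_predict(A, B, C, D, u)
# Residual of the equilibrium equation at the returned state.
print(np.max(np.abs(x - relu(A @ x + B @ u))))
```

Without a condition such as `||A||_inf < 1`, the same iteration may diverge or cycle, which is one concrete face of the well-posedness issue the abstract raises.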