LLMs FTW

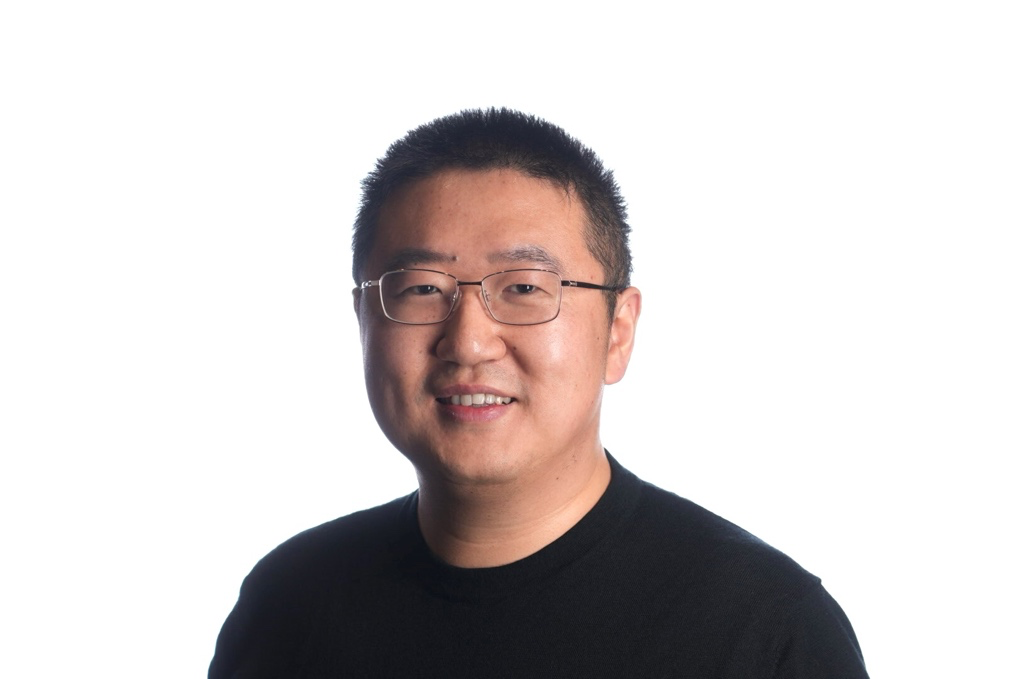

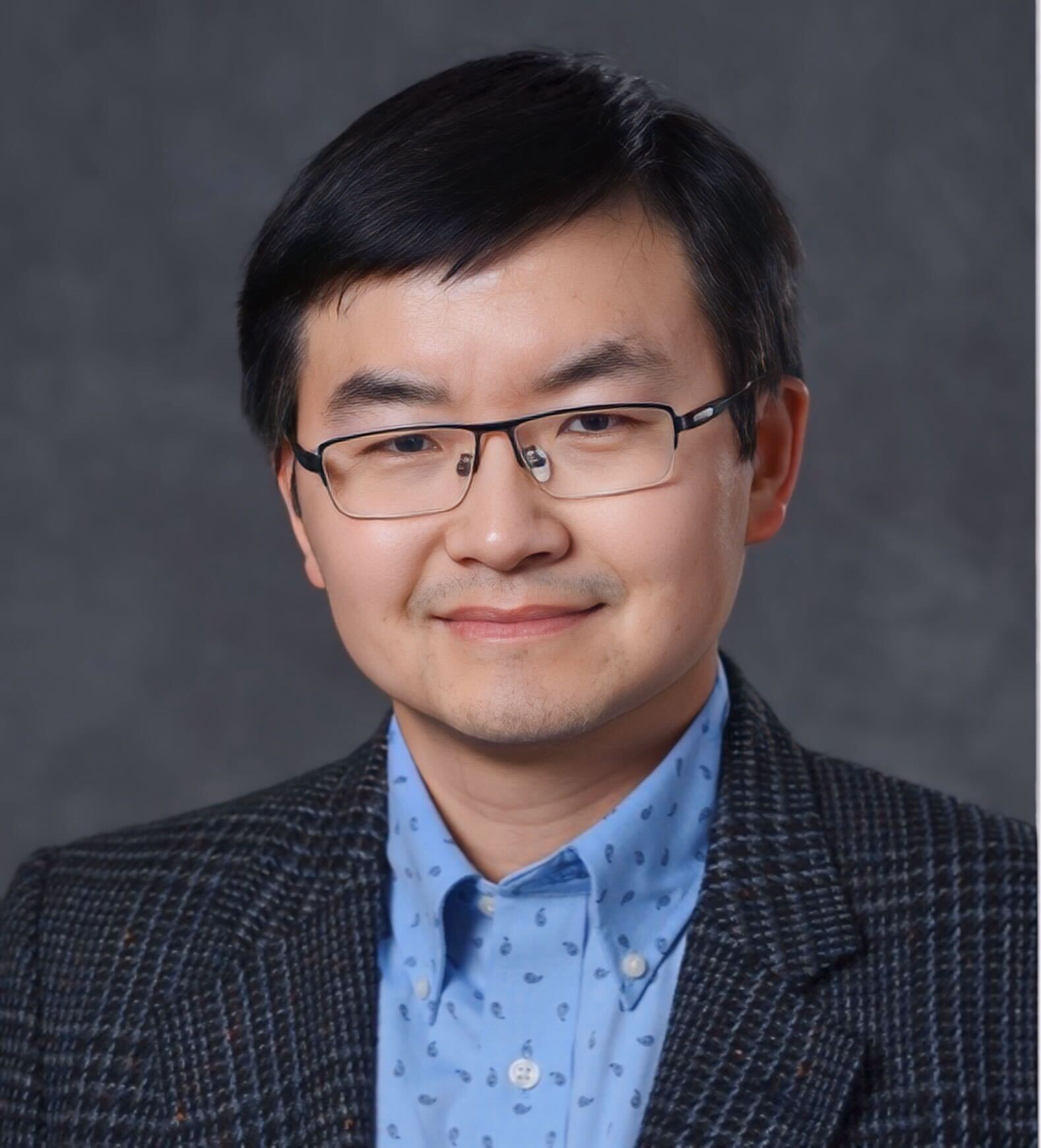

Tim Baldwin

Tim Baldwin is Acting Provost and Professor of Natural Language Processing at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), in addition to being a Melbourne Laureate Professor in the School of Computing and Information Systems, The University of Melbourne.

Tim completed a BSc (CS/Maths) and BA (Linguistics/Japanese) at The University of Melbourne in 1995, and an MEng (CS) and PhD (CS) at the Tokyo Institute of Technology in 1998 and 2001, respectively. He joined MBZUAI at the start of 2022, prior to which he was based at The University of Melbourne for 17 years. His research has been funded by organisations including the Australian Research Council, Google, Microsoft, Xerox, ByteDance, SEEK, NTT, and Fujitsu, and has been featured in MIT Tech Review, Bloomberg, Reuters, CNN, IEEE Spectrum, The Times, ABC News, The Age/Sydney Morning Herald, and Australian Financial Review. He is the author of nearly 500 peer-reviewed publications across diverse topics in natural language processing and AI, with over 22,000 citations and an h-index of 72 (Google Scholar). He is also an ARC Future Fellow and the recipient of a number of awards at top conferences.

Large language models (LLMs) have taken the world by storm, but with a very strong focus on the English language, a worrying concentration of effort among commercial players, and increasingly little transparency about how models are trained. In this talk, I will present work done at MBZUAI on LLMs, with a focus on issues in training LLMs for languages other than English, and a major push to increase transparency in all aspects of model training, selection, and evaluation.