Nonlinear Statistical Modeling for High-Dimensional and Small Sample Data

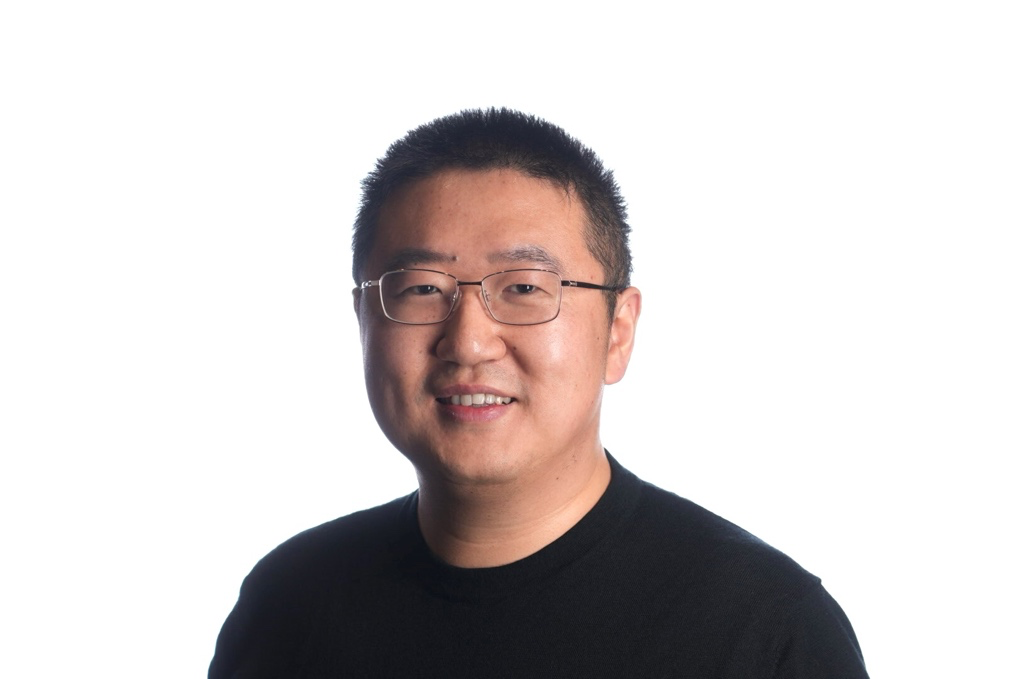

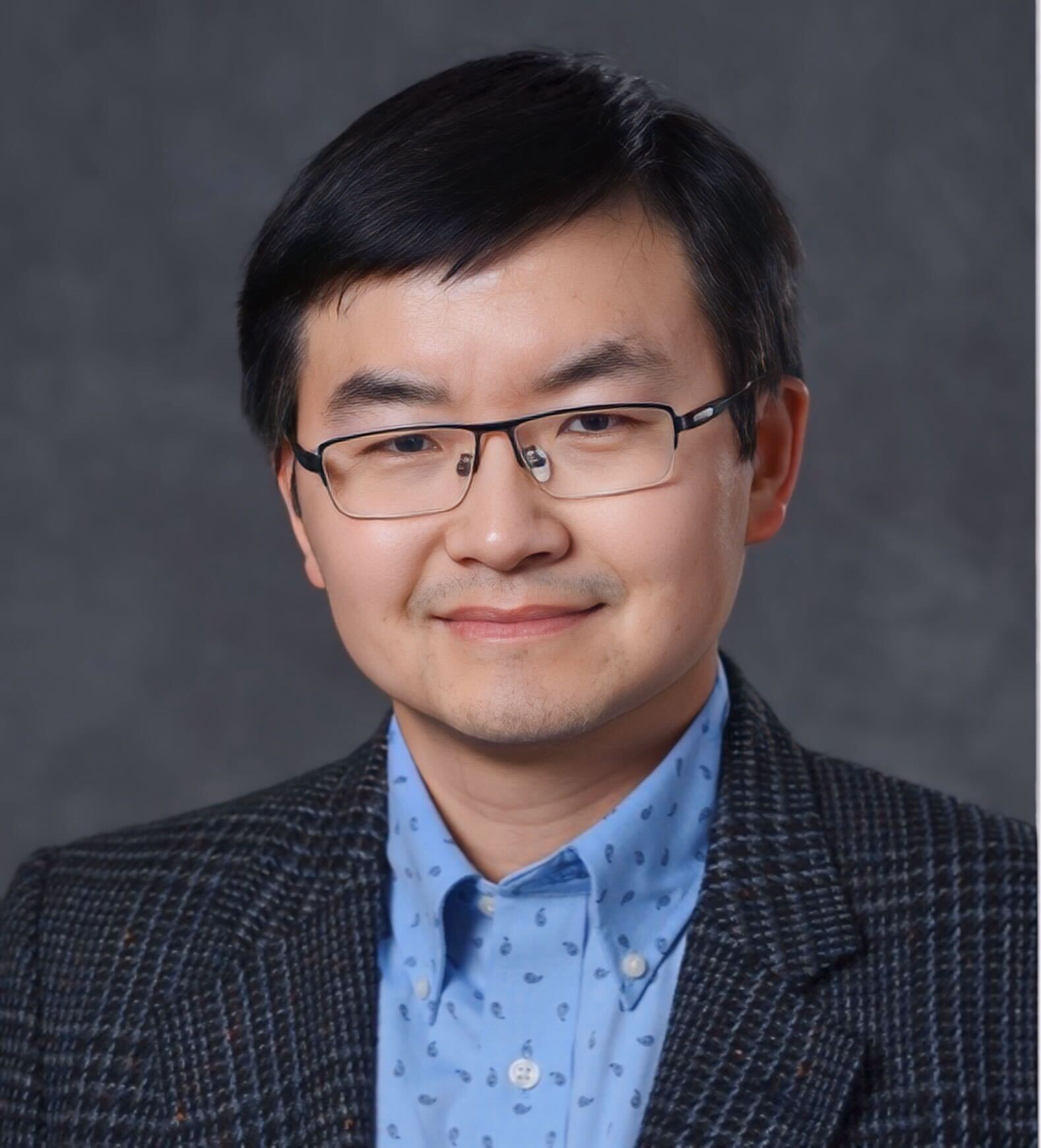

Makoto Yamada

Makoto Yamada received the PhD degree in statistical science from The Graduate University for Advanced Studies (SOKENDAI, The Institute of Statistical Mathematics), Tokyo, in 2010. He has held positions as a postdoctoral fellow with the Tokyo Institute of Technology from 2010 to 2012, as a research associate with NTT Communication Science Laboratories from 2012 to 2013, as a research scientist with Yahoo Labs from 2013 to 2015, and as an assistant professor with Kyoto University from 2015 to 2017. He is currently a team leader at RIKEN AIP and an associate professor at Kyoto University. His research interests include machine learning and its applications to biology, natural language processing, and computer vision. He has published more than 30 research papers in premier conferences and journals and won the WSDM 2016 Best Paper Award.

Feature selection (also called variable selection) is an important machine learning problem with many applications, such as gene selection from microarray data, document categorization, and prosthesis control. Because it is a fundamental and long-studied problem, many methods exist, including the least absolute shrinkage and selection operator (Lasso). However, few methods can select features from large, ultra high-dimensional data (more than a million features) in a nonlinear way. In this talk, we introduce several nonlinear feature selection methods for high-dimensional and small-sample data, including HSIC Lasso [1,2,3], kernel PSIs [4,5,6,7], and feature selection networks [8].
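To make the HSIC Lasso idea concrete, here is a minimal dense sketch in plain NumPy, not the speaker's implementation. It assumes the standard formulation: minimize 1/2 ||L - sum_k alpha_k K_k||_F^2 + lam * sum_k alpha_k subject to alpha_k >= 0, where K_k is the centered Gaussian Gram matrix of feature k and L is that of the output. The bandwidth sigma = 1 on standardized data, the unit-Frobenius-norm scaling of each Gram matrix, the coordinate-descent solver, and the names `centered_gaussian_gram` and `hsic_lasso` are all assumptions made for illustration.

```python
import numpy as np


def centered_gaussian_gram(v, sigma=1.0):
    """Centered Gaussian Gram matrix of a 1-D sample v, scaled to unit Frobenius norm."""
    n = v.shape[0]
    sq_dist = (v[:, None] - v[None, :]) ** 2
    K = np.exp(-sq_dist / (2.0 * sigma ** 2))
    H = np.eye(n) - np.full((n, n), 1.0 / n)  # centering matrix
    Kc = H @ K @ H
    norm = np.linalg.norm(Kc)  # Frobenius norm
    return Kc / norm if norm > 0 else Kc


def hsic_lasso(X, y, lam=0.01, n_iter=200):
    """Nonnegative Lasso on vectorized centered kernels (dense sketch, small n only)."""
    n, d = X.shape
    # Standardize so that one bandwidth (sigma = 1) is reasonable for every column.
    Xs = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)
    ys = (y - y.mean()) / (y.std() + 1e-12)
    L = centered_gaussian_gram(ys)
    # Column k of A is vec(K_k), so A is a dense (n*n, d) matrix.
    A = np.stack([centered_gaussian_gram(Xs[:, k]).ravel() for k in range(d)], axis=1)
    G = A.T @ A          # G[k, l] = <K_k, K_l>_F: redundancy between features
    c = A.T @ L.ravel()  # c[k] = <K_k, L>_F: relevance of feature k to the output
    alpha = np.zeros(d)
    for _ in range(n_iter):
        for k in range(d):
            if G[k, k] == 0.0:
                continue  # constant feature: its centered kernel is all zeros
            # Correlation with the partial residual that excludes feature k itself.
            rho = c[k] - G[k] @ alpha + G[k, k] * alpha[k]
            # Soft-threshold by lam, then project onto alpha_k >= 0.
            alpha[k] = max(0.0, (rho - lam) / G[k, k])
    return alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((200, 10))
    # Feature 1 enters only through X1**2, so its linear correlation with y is near zero.
    y = 2.0 * np.sin(X[:, 0]) + X[:, 1] ** 2 + 0.1 * rng.standard_normal(200)
    alpha = hsic_lasso(X, y)
    print("features ranked by alpha:", np.argsort(alpha)[::-1][:3])
```

In the toy usage, feature 1 affects y only through X1², and since E[X1 · X1²] = E[X1³] = 0 for a standard normal X1, a linear Lasso would see almost no signal from it. A kernel-based score can still detect that dependence. The off-diagonal terms of G penalize selecting redundant features together. Materializing the n²-by-d matrix A is only practical for small n; this sketch does not attempt the scalability to millions of features that the abstract refers to.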