Provable Offline Reinforcement Learning: Neural Function Approximation, Randomization, and Sample Complexity

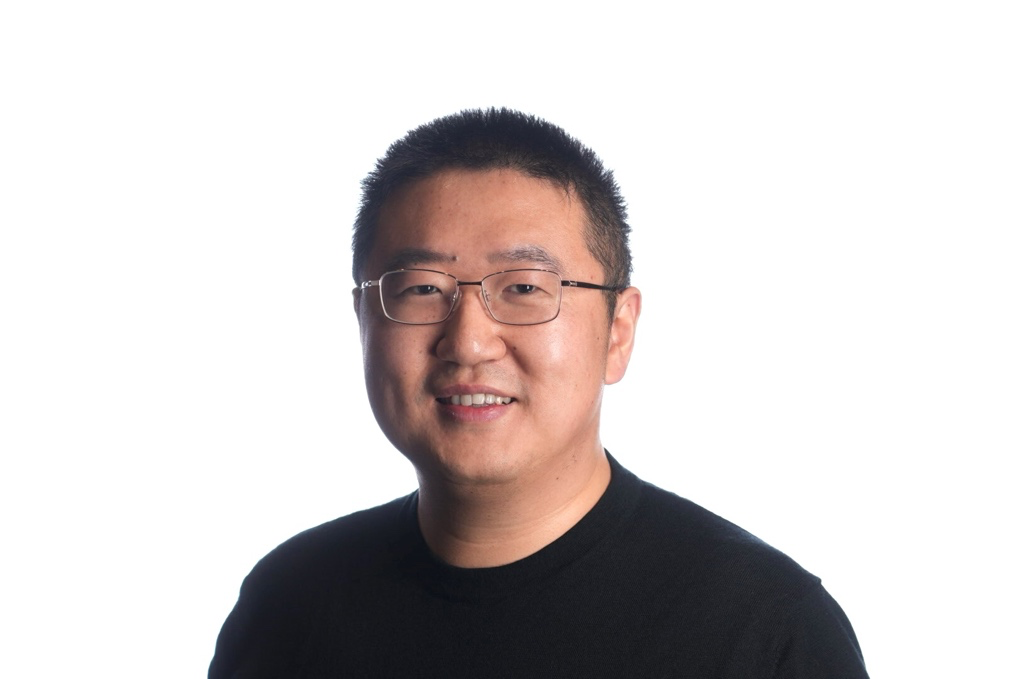

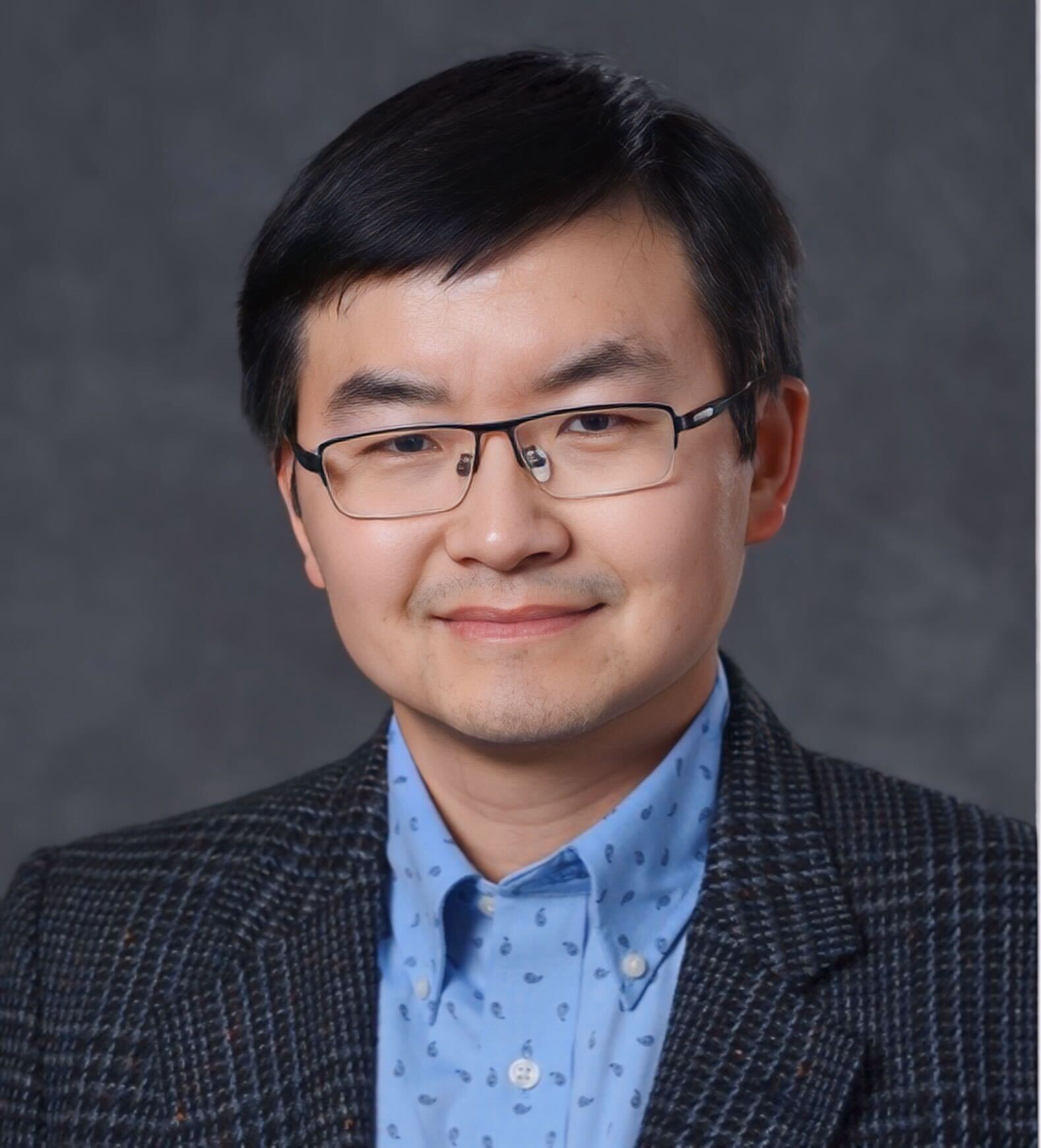

Thanh Nguyen-Tang

Thanh Nguyen-Tang is a postdoctoral research fellow in the Department of Computer Science at Johns Hopkins University. His research focuses on the algorithmic and theoretical foundations of modern machine learning, with the aim of building data-efficient, deployment-efficient, and robust AI systems. He has published in top-tier machine learning conferences, including NeurIPS, ICLR, AISTATS, and AAAI. Thanh completed his Ph.D. in Computer Science at the Applied AI Institute at Deakin University, Australia.

In this talk, Thanh will share some of his recent results on offline reinforcement learning (RL), an RL paradigm for domains where exploration is prohibitively expensive or even infeasible, but a fixed dataset of previous experiences is available a priori. Specifically, he will discuss how deep neural networks trained by (stochastic) gradient descent, combined with randomization, lead to a computationally efficient algorithm. The algorithm has a strong theoretical guarantee for generalization across large state spaces under mild assumptions on distributional shift, while also achieving favorable empirical performance. He will conclude with a discussion of future directions for making RL more data-efficient, deployment-efficient, and robust.