Recent Progress on Grokking and Probabilistic Federated Learning

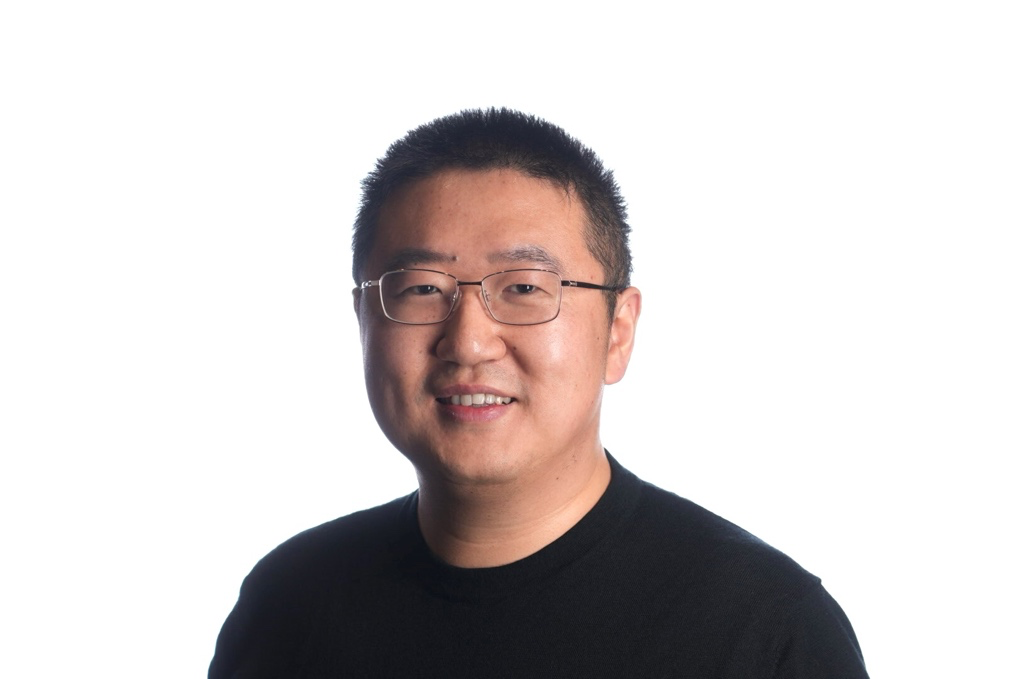

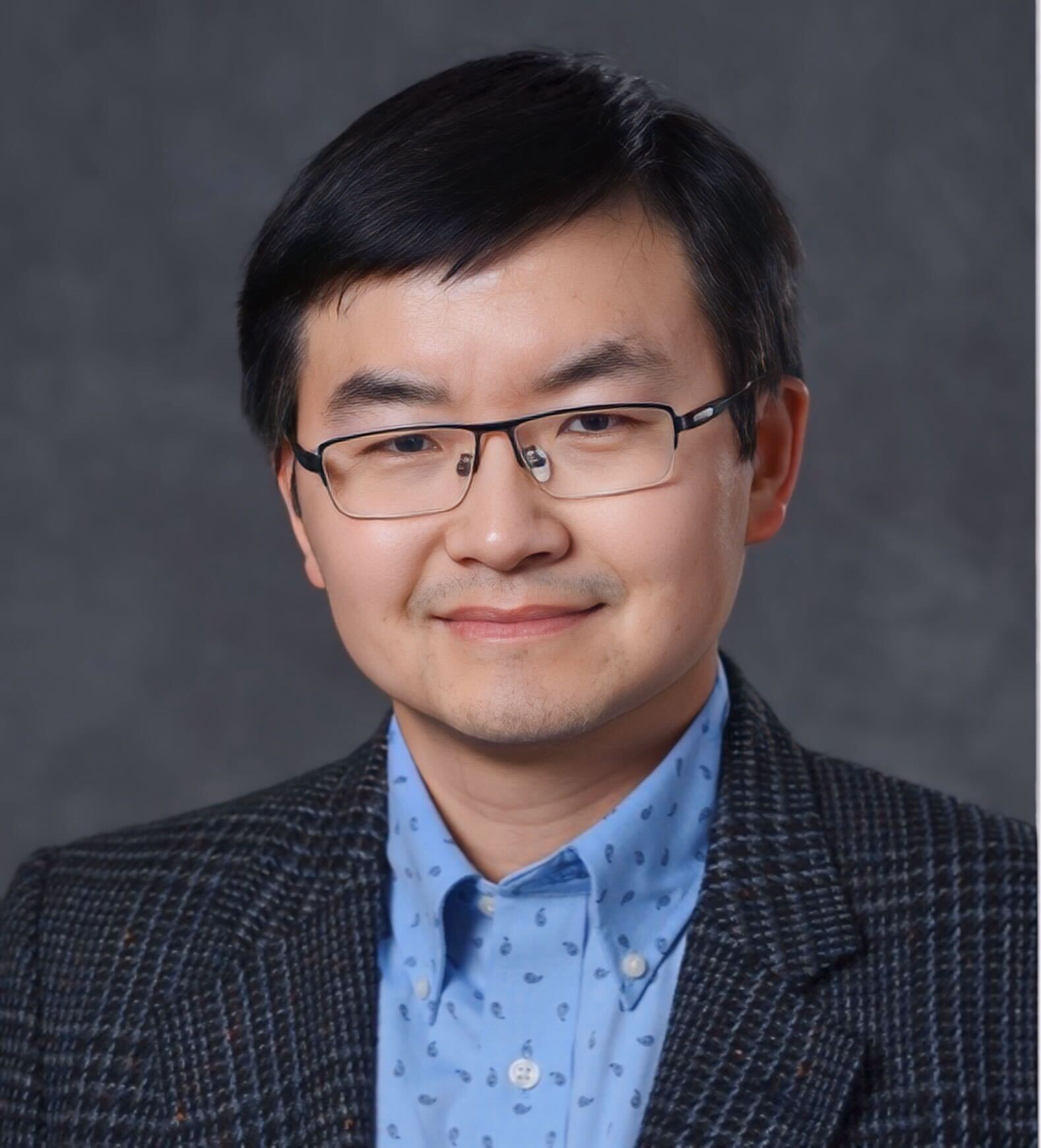

Thang D. Bui

Thang Bui is a lecturer in Machine Learning at the School of Computing, Australian National University. He is broadly interested in machine learning and statistics, with a particular focus on neural networks, probabilistic models, approximate Bayesian inference, and sequential decision-making under uncertainty.

This talk is divided into two parts. The first part covers recent empirical observations on the grokking phenomenon, in which neural networks reach perfect or near-perfect accuracy on the validation set long after attaining similar performance on the training set. We demonstrate that grokking is not limited to neural networks but also occurs in other settings, such as Gaussian process (GP) classification, GP regression, and linear regression. We hypothesize that the phenomenon is governed by the accessibility of certain regions in the error and complexity landscapes. In the second part, I will discuss federated training of probabilistic models, specifically Bayesian neural networks and Gaussian processes, using partitioned variational inference. I will also highlight some pitfalls of current techniques and discuss potential future directions.