Robust Multivariate Time-Series Forecasting: Adversarial Attacks and Defense Mechanisms

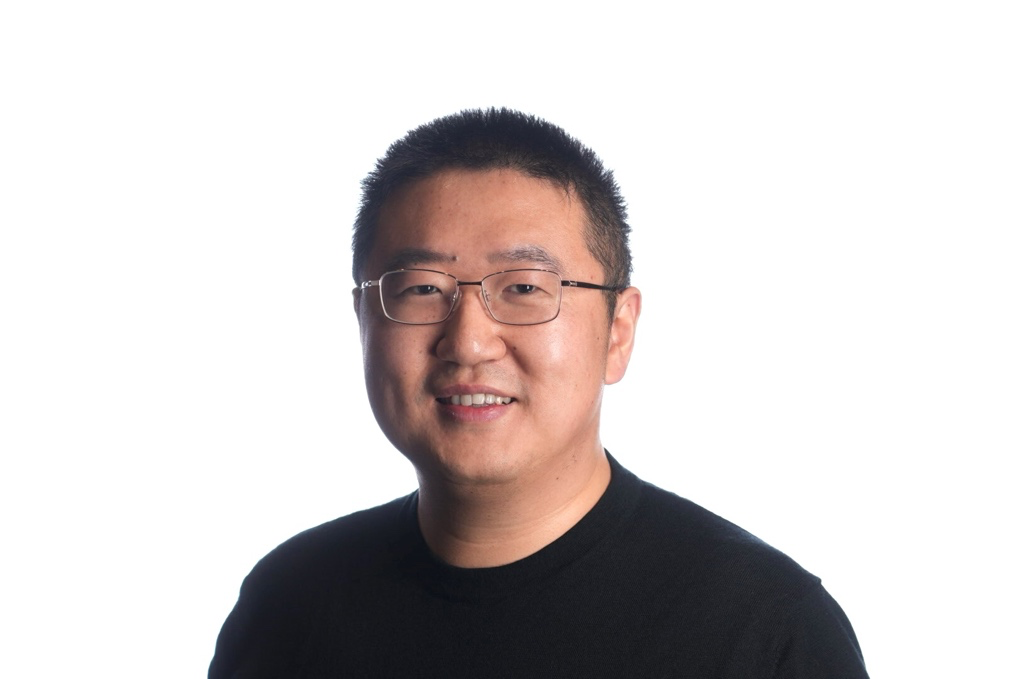

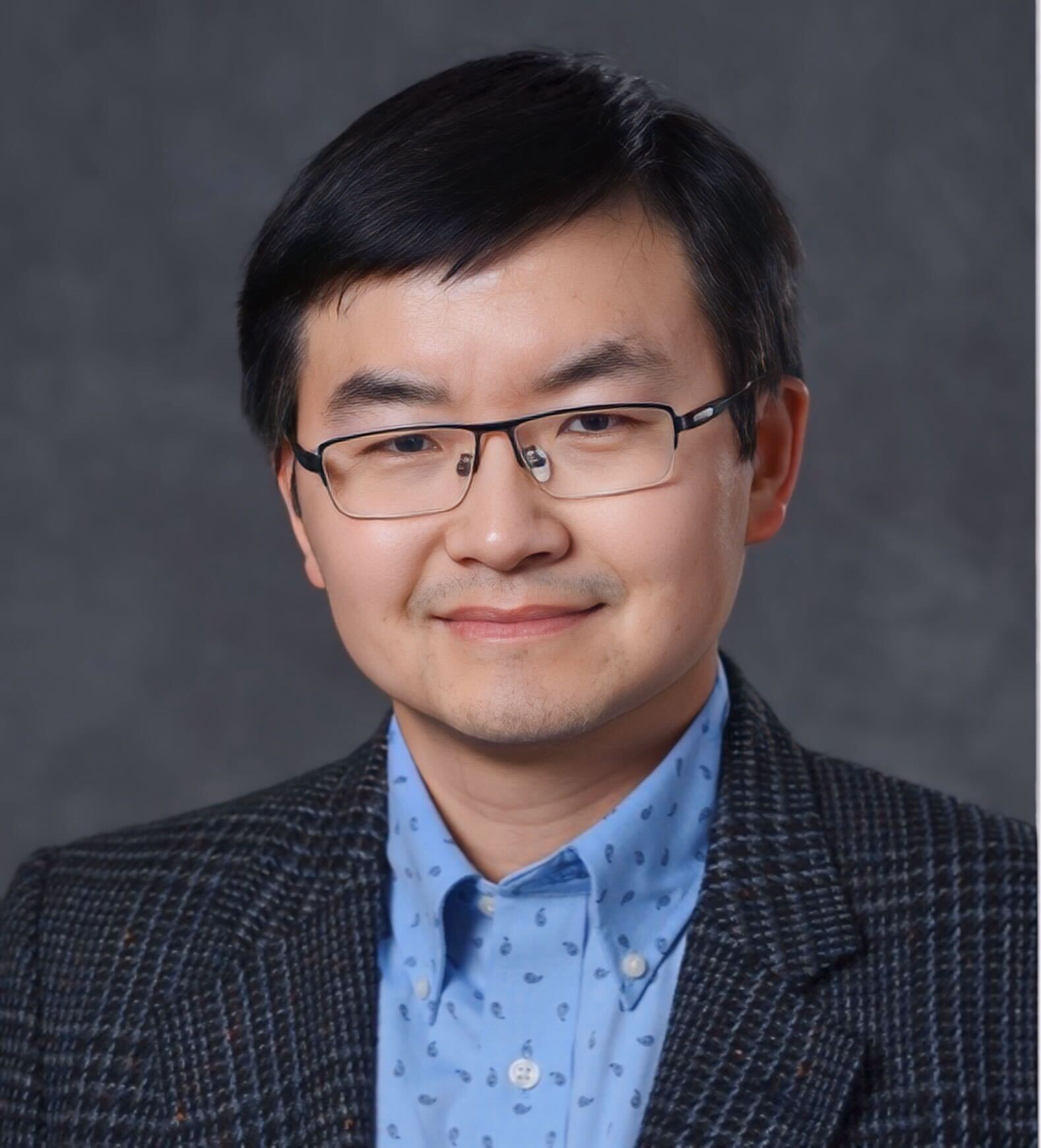

Nghia Hoang

Dr. Hoang is an Assistant Professor at Washington State University. He received his Ph.D. in 2015 from the National University of Singapore (NUS), worked as a research fellow at NUS (2015-2017), and then as a postdoctoral research associate at MIT (2017-2018). He subsequently joined the MIT-IBM Watson AI Lab as a research staff member and principal investigator. Later, he moved to Amazon's AWS AI Labs as a Senior Research Scientist (2020-2022). In early 2023, he joined the faculty of the School of Electrical Engineering and Computer Science at Washington State University. Dr. Hoang publishes actively and serves as a PC member, senior PC member, or Area Chair at major ML/AI venues such as ICML, NeurIPS, ICLR, AAAI, IJCAI, UAI, and ECAI. He is also on the editorial board of the Machine Learning journal and an action editor of Neural Networks. His current research interests span probabilistic machine learning, with a specific focus on distributed and federated learning.

In this talk, I will discuss the threat that adversarial attacks pose to multivariate probabilistic forecasting models, along with viable defense mechanisms. Our studies uncover a new attack pattern that degrades the forecast of a target time series by making strategic, sparse (imperceptible) modifications to the past observations of a small number of other time series. To mitigate the impact of such attacks, we have developed two defense strategies. First, we extend a randomized smoothing technique previously developed for classification to multivariate forecasting. Second, we develop an adversarial training algorithm that learns to generate adversarial examples while simultaneously optimizing the forecasting model to be robust against them. Extensive experiments on real-world datasets confirm that our attack schemes are powerful and that our defense algorithms are more effective than baseline defense mechanisms. This talk is based on our recently published work at ICLR 2023.
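To make the attack pattern and the smoothing defense concrete, here is a deliberately tiny sketch. It assumes a hypothetical linear one-step forecaster in which the target's next value depends on the latest observation of every series (the work described above targets probabilistic multivariate models, not this toy), and the names `linear_forecast`, `sparse_attack`, and `smoothed_forecast` are illustrative, not taken from the paper. The attack greedily shifts the latest observation of at most `max_series` non-target series by `epsilon`, leaving the target's own history untouched; the smoothing wrapper reports the median forecast over Gaussian-perturbed copies of the input with noise scale `sigma`.

```python
import random
import statistics

# Hypothetical toy model (not the paper's forecaster): the target's next value
# is a weighted sum of the most recent observation of every series.
def linear_forecast(history, weights):
    return sum(w * series[-1] for w, series in zip(weights, history))

def sparse_attack(history, weights, target, max_series, epsilon):
    """Illustrative greedy sparse attack: shift the latest observation of at
    most `max_series` non-target series by epsilon, in the direction that
    raises the target forecast. The target's own history is left untouched."""
    others = [i for i in range(len(history)) if i != target]
    # Series with the largest |weight| move the forecast most per unit change.
    others.sort(key=lambda i: abs(weights[i]), reverse=True)
    attacked = [list(series) for series in history]  # copy; never mutate input
    for i in others[:max_series]:
        attacked[i][-1] += epsilon if weights[i] >= 0 else -epsilon
    return attacked

def smoothed_forecast(history, weights, sigma, n_samples, seed=0):
    """Illustrative randomized smoothing: forecast on many Gaussian-noised
    copies of the input history and report the median forecast."""
    rng = random.Random(seed)
    forecasts = []
    for _ in range(n_samples):
        noisy = [[x + rng.gauss(0.0, sigma) for x in s] for s in history]
        forecasts.append(linear_forecast(noisy, weights))
    return statistics.median(forecasts)

# Usage: four series, series 0 is the target (made-up numbers).
history = [[1.0, 1.2], [0.5, 0.4], [2.0, 2.1], [0.3, 0.3]]
weights = [0.5, 0.1, 0.8, -0.6]
clean = linear_forecast(history, weights)
attacked = sparse_attack(history, weights, target=0, max_series=2, epsilon=0.2)
print(clean, linear_forecast(attacked, weights))
print(smoothed_forecast(history, weights, sigma=0.1, n_samples=2001, seed=1))
```

Only two of the three non-target series are touched, yet the target forecast rises by `epsilon` times the sum of their absolute weights. Because this toy model is linear, median smoothing shifts by the same amount under the attack, so the sketch shows only the mechanics; the appeal of randomized smoothing is that the smoothed output can be shown to move only a bounded amount for a bounded input perturbation. The adversarial-training defense is not sketched here.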