Mean field limit in neural network learning

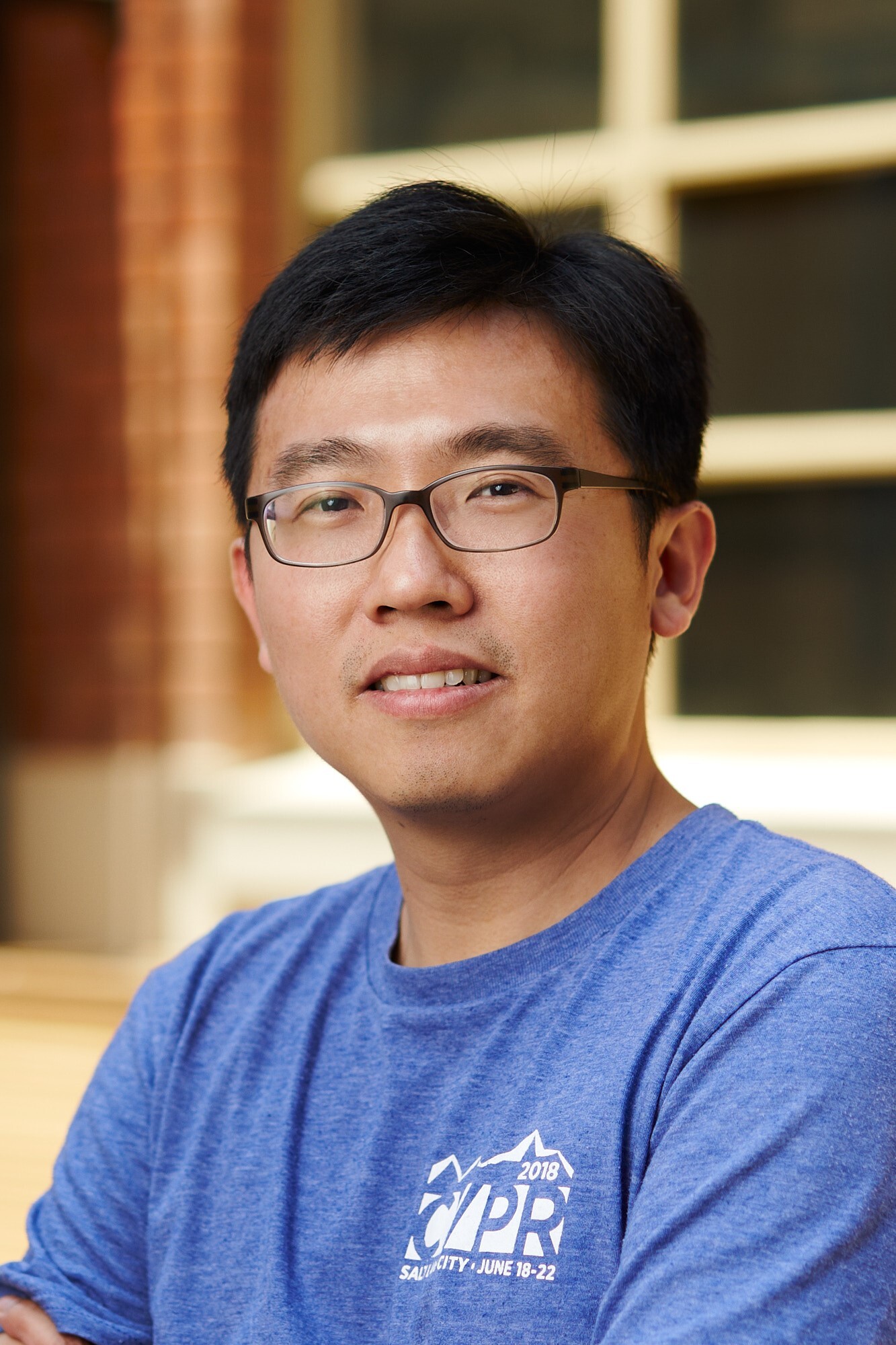

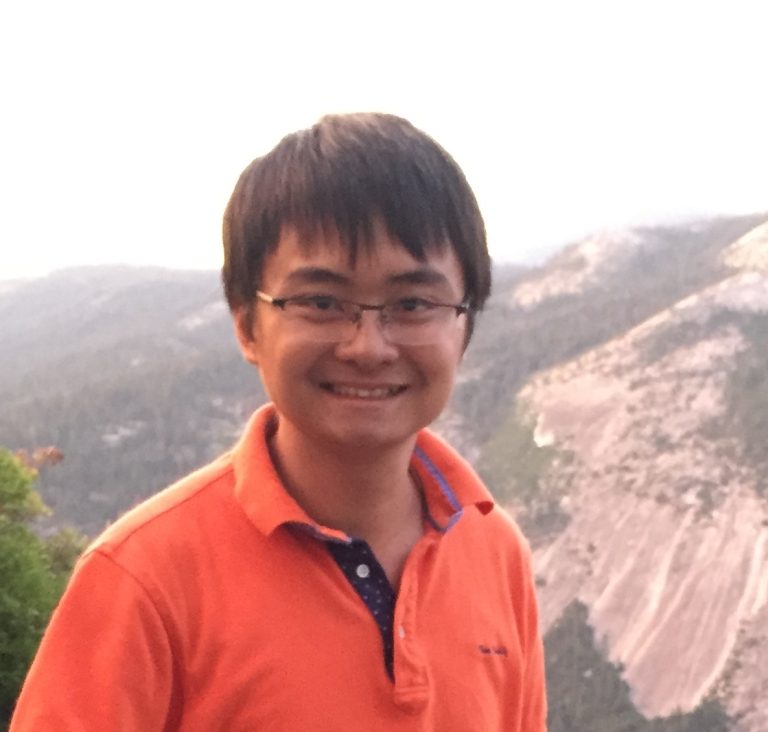

Phan-Minh Nguyen

Phan-Minh Nguyen (Nguyễn Phan Minh) recently obtained his PhD in Electrical Engineering from Stanford University, advised by Andrea Montanari, and previously earned his bachelor’s degree from the National University of Singapore. Over the years, his work has ranged from information and coding theory to statistical inference and, more recently, theoretical aspects of neural networks. His research fuses some imagination from his time as a high school physics competitor, some flexibility from an engineering education, and some rigor from his years struggling with maths. He now works in the finance industry at the Voleon Group.

Neural networks are among the most powerful classes of machine learning models, but their analysis is notoriously difficult. Their optimization is highly non-convex, which prevents one from decoupling the optimization aspect from the statistical aspect, as is usually done in traditional statistics. Their model size is also typically huge: empirically, they easily fit large training datasets perfectly. A curious question has emerged in recent years: can we turn some of these difficulties to our advantage and say something meaningful about the behavior of neural networks during training?

In this talk, we present one such viewpoint. In the limit of a large number of neurons per layer, and under suitable scaling, the training dynamics of the neural network tend to a meaningful nonlinear dynamical limit, known as the mean field limit. This viewpoint not only takes a major part of the analytical difficulty (the model’s large width) out of the picture, but also opens the way to rigorous studies of the neural network’s properties. These include a proof of convergence to the global optimum, which sheds light on why neural networks can be optimized well despite non-convexity, and a precise mathematical characterization of the data representation learned by a simple autoencoder.
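For orientation, the two-layer case can be sketched in formulas. This is an illustrative sketch only: the notation ($\sigma_*$, $\theta_i$, $\rho_t$, $V$, $U$, $\Psi$) is an assumption that follows a common formulation of the two-layer mean field limit under squared loss, it is not taken from this abstract, and constants such as the learning-rate schedule are absorbed into the time variable.

```latex
\begin{align*}
  % Two-layer network with N neurons under the mean field (1/N) scaling
  \hat{y}(x) &= \frac{1}{N} \sum_{i=1}^{N} \sigma_*(x; \theta_i), \\
  % As N grows, the empirical distribution of the neurons' parameters
  % is tracked by a deterministic distribution rho_t
  \frac{1}{N} \sum_{i=1}^{N} \delta_{\theta_i(t)} &\approx \rho_t, \\
  % Limiting dynamics: a PDE that is nonlinear because Psi depends on rho_t
  \partial_t \rho_t &= \nabla_\theta \cdot \bigl( \rho_t \, \nabla_\theta \Psi(\theta; \rho_t) \bigr), \\
  \Psi(\theta; \rho) &= V(\theta) + \int U(\theta, \theta') \, \rho(\mathrm{d}\theta'), \\
  V(\theta) &= -\mathbb{E}\bigl[ y \, \sigma_*(x; \theta) \bigr], \qquad
  U(\theta, \theta') = \mathbb{E}\bigl[ \sigma_*(x; \theta) \, \sigma_*(x; \theta') \bigr].
\end{align*}
```

Read this way, each neuron behaves like a particle moving in an external potential $V$ and interacting with every other neuron through the pairwise potential $U$. The width $N$ no longer appears in the limiting equation.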

This talk will be a tour of the story of two-layer neural networks, a simple two-layer autoencoder, and how new non-trivial ideas arise in the multilayer case. Along the way, we shall draw analogies with the physics of interacting particles, with only light mathematical content. The talk is based on joint work with Andrea Montanari, Song Mei, and Huy Tuan Pham.