
JPIS: A joint model for profile-based intent detection and slot filling with slot-to-intent attention

Motivations:

Recent studies on intent detection and slot filling have achieved high overall accuracy on standard benchmark datasets. Despite this strong performance, most existing studies rely solely on plain text, an assumption that may not hold in many real-world situations. For instance, the utterance “Book a ticket to Hanoi” is ambiguous: it could refer to booking a plane, train or bus ticket, which makes its intent difficult to identify correctly.

Profile-based intent detection and slot filling take the user’s profile information into account, such as User Profile (a set of user-associated feature vectors representing the user’s preferences and attributes) and Context Awareness (a list of vectors indicating the user’s state and status). Although these two tasks reflect real-world scenarios, they remain under-explored.
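To make the two profile types concrete, the sketch below bundles an utterance with its User Profile and Context Awareness vectors. It is a minimal illustration under assumptions: the class `ProfileUtterance`, its field names and the vector values are hypothetical and do not reflect the ProSLU data format.

```python
from dataclasses import dataclass, field
from typing import List

import numpy as np


@dataclass
class ProfileUtterance:
    """Hypothetical container for one profile-based SLU example.

    Field names and vector sizes are illustrative assumptions, not ProSLU's format.
    """
    tokens: List[str]                     # word tokens of the utterance
    user_profile: List[np.ndarray]        # UP: user-associated feature vectors
    context_awareness: List[np.ndarray]   # CA: vectors describing the user's state
    intent: str = ""                      # gold intent label (empty if unknown)
    slots: List[str] = field(default_factory=list)  # one slot label per token

    def __post_init__(self) -> None:
        # Slot filling assigns exactly one label to every token.
        if self.slots and len(self.slots) != len(self.tokens):
            raise ValueError("need exactly one slot label per token")


# The ambiguous "Book a ticket to Hanoi" utterance. The vector values are made up;
# the point is that the profile, not the text, could disambiguate plane/train/bus.
example = ProfileUtterance(
    tokens=["Book", "a", "ticket", "to", "Hanoi"],
    user_profile=[np.array([0.9, 0.1, 0.0]), np.array([0.2, 0.8])],
    context_awareness=[np.array([1.0, 0.0, 0.0, 0.0])],
)
```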

How we handle:

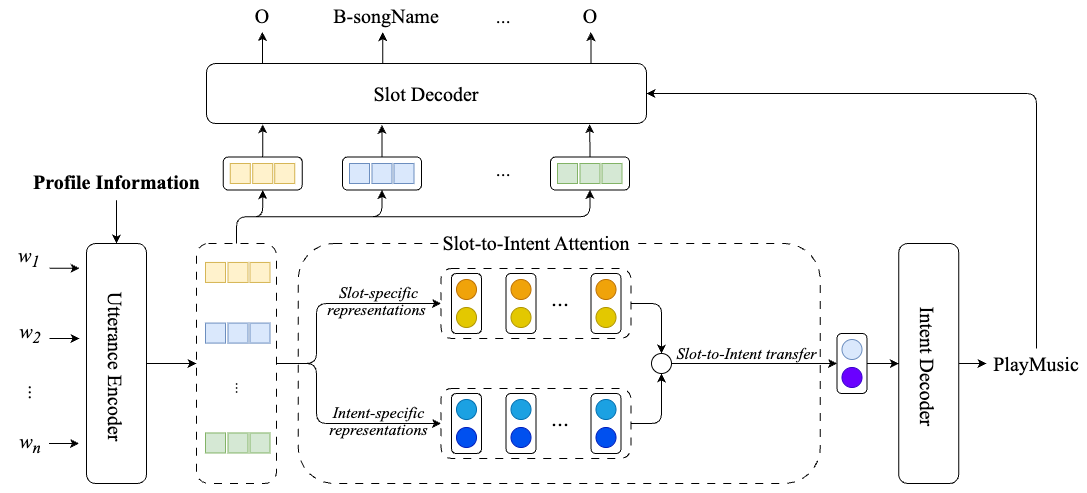

We propose JPIS, a joint model that further improves the accuracy of profile-based intent detection and slot filling. JPIS comprises four main components: (i) Utterance Encoder, (ii) Slot-to-Intent Attention, (iii) Intent Decoder and (iv) Slot Decoder.

- Utterance Encoder: incorporates the supporting profile information to generate feature vectors for word tokens in the input utterance.

- Slot-to-Intent Attention: derives label-specific vector representations of intents and slot labels, then employs slot label-specific vectors to guide intent detection.

- Intent Decoder: predicts the intent label of the input utterance using a weighted sum of the intent label-specific vectors from the Slot-to-Intent Attention component.

- Slot Decoder: leverages the representation of the predicted intent label and word-level feature vectors from the utterance encoder to predict a slot label for each word token in the input.
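The data flow through the four components can be sketched in NumPy. This is a toy, untrained forward pass written under our own assumptions, not the JPIS implementation: the encoder simply adds a mean-pooled profile vector to each token vector, label-specific vectors come from a per-label attention over tokens, the slot-to-intent step lets each intent vector attend over the slot vectors with a residual connection, and the slot decoder is a plain argmax classifier. All names (`encode`, `label_vectors`, `forward`, `params`) and sizes are hypothetical; the released code has the actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)
D, N_INTENT, N_SLOT = 16, 4, 5  # hidden size and label counts (hypothetical)


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # shift for numerical stability
    return e / e.sum(axis=axis, keepdims=True)


# Randomly initialised (untrained) parameters of the toy model.
params = {
    "W_prof": rng.normal(size=(D, D)) * 0.1,     # projects the pooled profile vector
    "Q_intent": rng.normal(size=(N_INTENT, D)),  # one query vector per intent label
    "Q_slot": rng.normal(size=(N_SLOT, D)),      # one query vector per slot label
    "W_intent": rng.normal(size=(N_INTENT, D)),  # intent classifier
    "E_intent": rng.normal(size=(N_INTENT, D)),  # intent label embeddings
    "W_slot": rng.normal(size=(N_SLOT, 2 * D)),  # slot classifier over [h_t; e]
}


def encode(token_vecs, profile_vecs, p):
    """(i) Encoder stand-in: add a projected, mean-pooled profile vector to each
    token vector. Profile vectors are assumed to be pre-projected to size D."""
    profile = np.mean(profile_vecs, axis=0) @ p["W_prof"].T
    return np.tanh(token_vecs + profile)  # (T, D)


def label_vectors(H, Q):
    """Label attention: each label's query attends over the tokens."""
    attn = softmax(Q @ H.T, axis=-1)  # (L, T)
    return attn @ H                   # (L, D)


def forward(token_vecs, profile_vecs, p=params):
    H = encode(token_vecs, profile_vecs, p)
    V_int = label_vectors(H, p["Q_intent"])  # intent label-specific vectors
    V_slot = label_vectors(H, p["Q_slot"])   # slot label-specific vectors
    # (ii) Slot-to-intent attention: each intent vector attends over slot vectors.
    V_int = V_int + softmax(V_int @ V_slot.T, axis=-1) @ V_slot
    # (iii) Intent decoder: weighted sum of intent label-specific vectors.
    weights = softmax(V_int @ H.mean(axis=0))  # (N_INTENT,)
    r = weights @ V_int                        # (D,)
    intent = int(np.argmax(p["W_intent"] @ r))
    # (iv) Slot decoder: predicted-intent embedding concatenated to every token.
    e = np.broadcast_to(p["E_intent"][intent], H.shape)
    slot_logits = np.concatenate([H, e], axis=-1) @ p["W_slot"].T  # (T, N_SLOT)
    return intent, slot_logits.argmax(axis=-1)


tokens = rng.normal(size=(5, D))   # stand-in embeddings for a 5-token utterance
profile = rng.normal(size=(3, D))  # stand-in UP/CA vectors
intent, slots = forward(tokens, profile)
```

Because the parameters are random, the predictions carry no meaning; the example only shows how the four steps fit together. Feeding the predicted intent into the slot decoder carries information from intents to slots, while the attention from intent vectors to slot vectors carries it in the opposite direction.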

How we evaluate:

We conduct experiments on the Chinese dataset ProSLU, the only publicly available benchmark with supporting profile information. We experiment with two settings: with and without pretrained language models (PLMs). We train each model for 50 epochs with 5 different random seeds.
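The protocol of two settings, five seeds and 50 epochs can be organised as a simple experiment grid. The sketch assumes that scores are summarised by their mean and standard deviation over seeds; the seed values, `run_protocol` and the `train_and_eval` callback are hypothetical placeholders for a real training loop.

```python
import random
import statistics
from typing import Callable, Dict, List

import numpy as np

EPOCHS = 50                     # from the text
SEEDS = [1, 2, 3, 4, 5]         # hypothetical: the actual seed values are not given
SETTINGS = {"without_plm": False, "with_plm": True}


def set_seed(seed: int) -> None:
    """Seed Python's and NumPy's RNGs (a DL framework would need its own call too)."""
    random.seed(seed)
    np.random.seed(seed)


def run_protocol(train_and_eval: Callable[[bool, int], float]) -> Dict[str, Dict[str, float]]:
    """Run every setting with every seed and summarise the scores per setting.

    `train_and_eval(use_plm, epochs)` is a hypothetical callback that trains a
    model and returns one test score.
    """
    summary = {}
    for name, use_plm in SETTINGS.items():
        scores: List[float] = []
        for seed in SEEDS:
            set_seed(seed)
            scores.append(train_and_eval(use_plm, EPOCHS))
        summary[name] = {"mean": statistics.mean(scores), "stdev": statistics.stdev(scores)}
    return summary


def dummy_train_and_eval(use_plm: bool, epochs: int) -> float:
    """Placeholder for real training: returns a seeded random number, not a score."""
    return float(np.random.rand())


summary = run_protocol(dummy_train_and_eval)
```

Reseeding before every run makes each (setting, seed) pair reproducible, so rerunning the grid yields the same summary.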

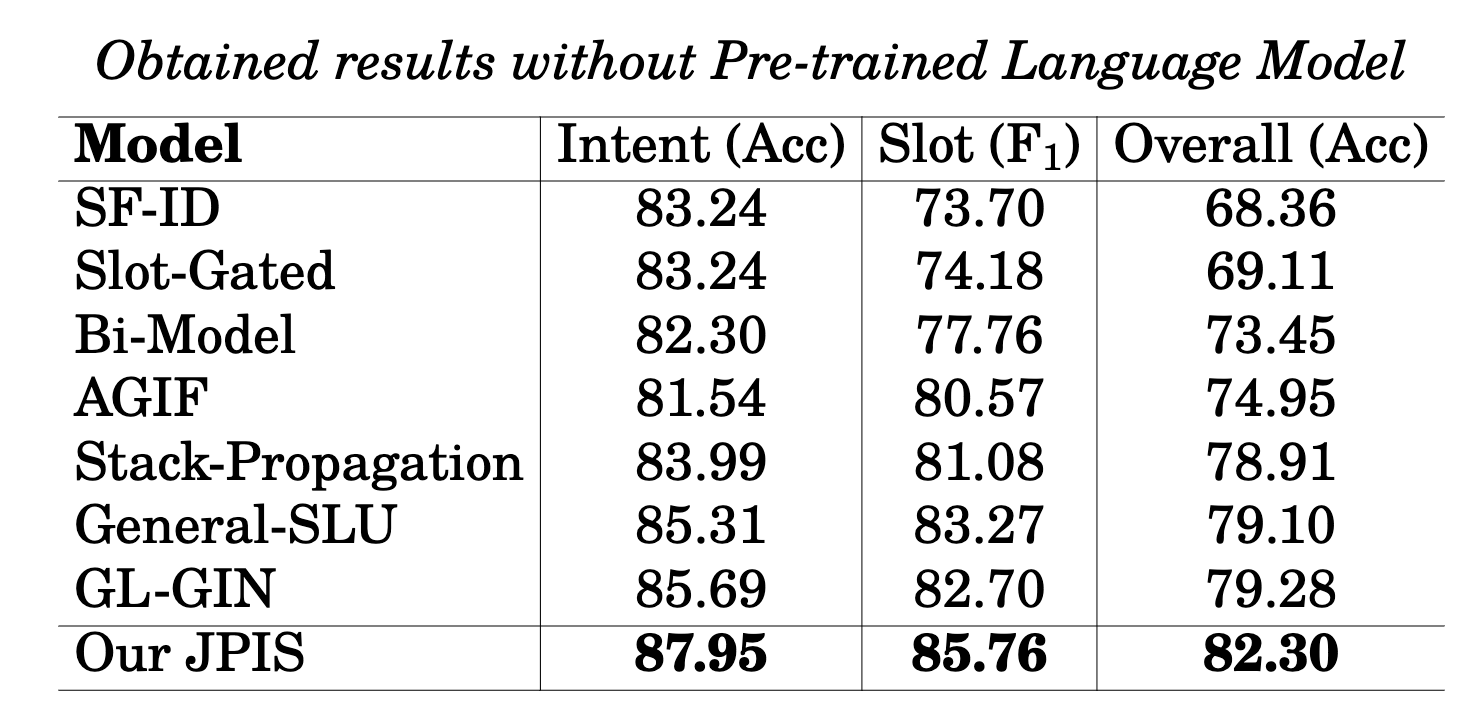

Table 1: Results without PLMs

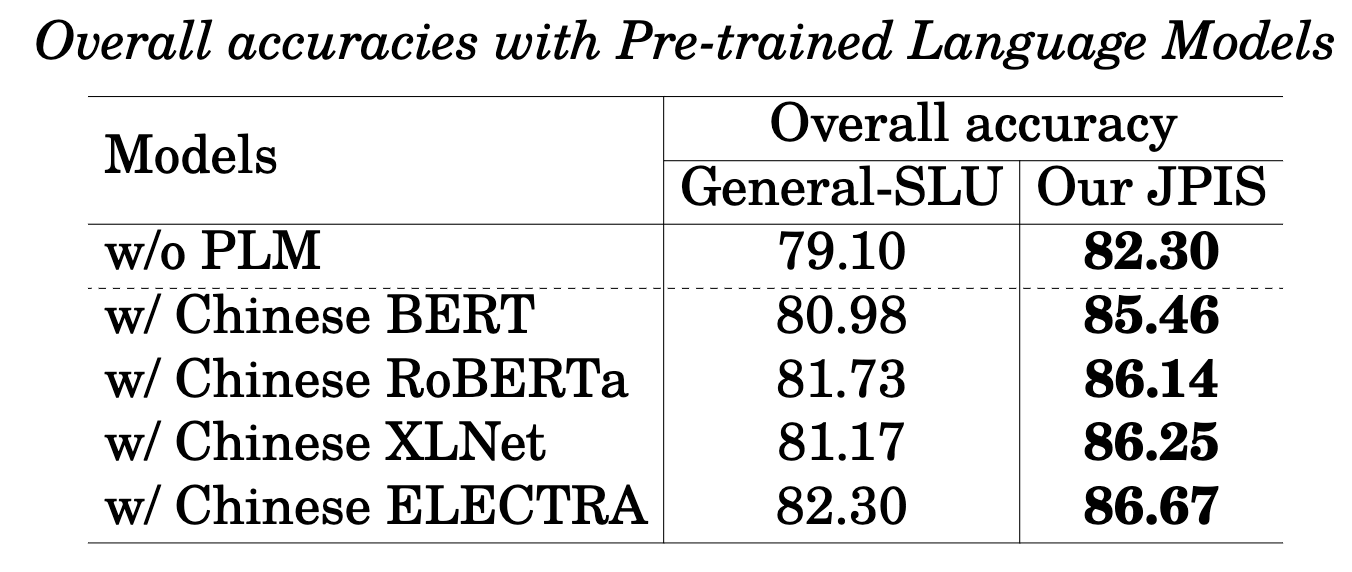

Table 2: Overall accuracies with PLMs

As shown in Table 1, JPIS achieves substantial absolute improvements of 2.5% to 3.0% over the previous best results on all three metrics. This demonstrates the effectiveness of information transfer in both directions, from intents to slots and from slots to intents, within the model architecture. Table 2 shows that PLMs notably improve the performance of both JPIS and “General SLU”. JPIS consistently outperforms “General SLU” by substantial margins across all PLMs tested, establishing a new state-of-the-art overall accuracy of 86.67%.
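Absolute improvements here are differences in percentage points between scores. As a hedged sketch, the snippet below computes three metrics commonly reported for joint intent detection and slot filling, which we assume to be the three in Table 1: slot F1 over BIO spans, intent accuracy, and overall accuracy, where an utterance counts as correct only if its intent and every slot label are predicted correctly. The function names and the toy labels are illustrative and not taken from ProSLU.

```python
from typing import Dict, List, Set, Tuple


def bio_spans(labels: List[str]) -> Set[Tuple[int, int, str]]:
    """Extract (start, end_exclusive, type) spans from a BIO label sequence."""
    spans, start, kind = set(), None, None
    for i, lab in enumerate(list(labels) + ["O"]):  # sentinel "O" closes a trailing span
        continues = start is not None and lab == f"I-{kind}"
        if start is not None and not continues:
            spans.add((start, i, kind))
            start, kind = None, None
        # A "B-" tag, or an "I-" tag that does not continue the open span, starts a span.
        if lab.startswith("B-") or (lab.startswith("I-") and not continues):
            start, kind = i, lab[2:]
    return spans


def evaluate(gold_intents, pred_intents, gold_slots, pred_slots) -> Dict[str, float]:
    n = len(gold_intents)
    intent_acc = sum(g == p for g, p in zip(gold_intents, pred_intents)) / n
    # Overall accuracy: intent AND the full slot sequence must both be correct.
    overall_acc = sum(
        gi == pi and gs == ps
        for gi, pi, gs, ps in zip(gold_intents, pred_intents, gold_slots, pred_slots)
    ) / n
    tp = n_pred = n_gold = 0
    for gs, ps in zip(gold_slots, pred_slots):
        g, p = bio_spans(gs), bio_spans(ps)
        tp += len(g & p)
        n_pred += len(p)
        n_gold += len(g)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"slot_f1": f1, "intent_acc": intent_acc, "overall_acc": overall_acc}


# Toy data: both slot sequences are right, but the second intent is wrong.
result = evaluate(
    ["book_train", "book_flight"],
    ["book_train", "book_bus"],
    [["O", "O", "O", "O", "B-city"], ["O", "B-city"]],
    [["O", "O", "O", "O", "B-city"], ["O", "B-city"]],
)
# result == {"slot_f1": 1.0, "intent_acc": 0.5, "overall_acc": 0.5}
```

Overall accuracy is the strictest of the three, since a single wrong slot label or a wrong intent makes the whole utterance count as incorrect.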

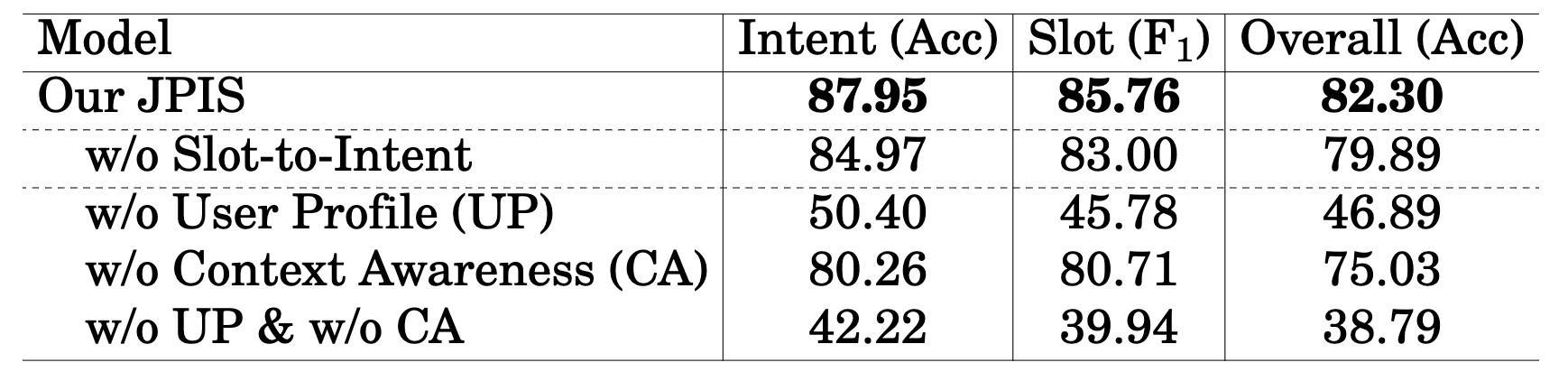

We also conduct an ablation study to verify the effectiveness of the slot-to-intent attention component and the impact of different profile information types on the model’s performance.

Table 3: Ablation study

Table 3 shows that the slot-to-intent attention contributes notably to performance and that the model makes effective use of the supporting profile information.
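A leave-one-out ablation like the one in Table 3 can be driven by a small set of configuration switches. The sketch below is hypothetical: `JPISConfig` and its flags are not options of the released code, and the profile types covered are only the two described in the motivation, so the actual rows of Table 3 may differ.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class JPISConfig:
    """Hypothetical switches for an ablation run (not the released code's flags)."""
    slot_to_intent: bool = True
    use_user_profile: bool = True
    use_context_awareness: bool = True


def ablation_variants(base: JPISConfig = JPISConfig()) -> List[Tuple[str, JPISConfig]]:
    """Full model plus one variant per removed component or profile type."""
    return [
        ("full model", base),
        ("w/o slot-to-intent attention", replace(base, slot_to_intent=False)),
        ("w/o User Profile", replace(base, use_user_profile=False)),
        ("w/o Context Awareness", replace(base, use_context_awareness=False)),
        ("w/o any profile information",
         replace(base, use_user_profile=False, use_context_awareness=False)),
    ]


for name, cfg in ablation_variants():
    print(f"{name:32s} {cfg}")
```

Each variant would then be trained and evaluated under the same protocol as the full model, so that any drop in score can be attributed to the removed part.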

Why it matters:

We have introduced JPIS, a joint model for profile-based intent detection and slot filling. JPIS integrates supporting profile information and introduces a slot-to-intent attention mechanism that transfers knowledge from slot labels to intent detection. Our experiments on the Chinese benchmark dataset ProSLU show that JPIS achieves new state-of-the-art performance, surpassing previous models by a substantial margin.

Read our JPIS paper (accepted at ICASSP 2024): https://ieeexplore.ieee.org/abstract/document/10446353

The JPIS model is publicly released at: https://github.com/VinAIResearch/JPIS


Thinh Pham, Dat Quoc Nguyen

VinAI Research, Vietnam
