Thinh Pham, Dat Quoc Nguyen

VinAI Research, Vietnam

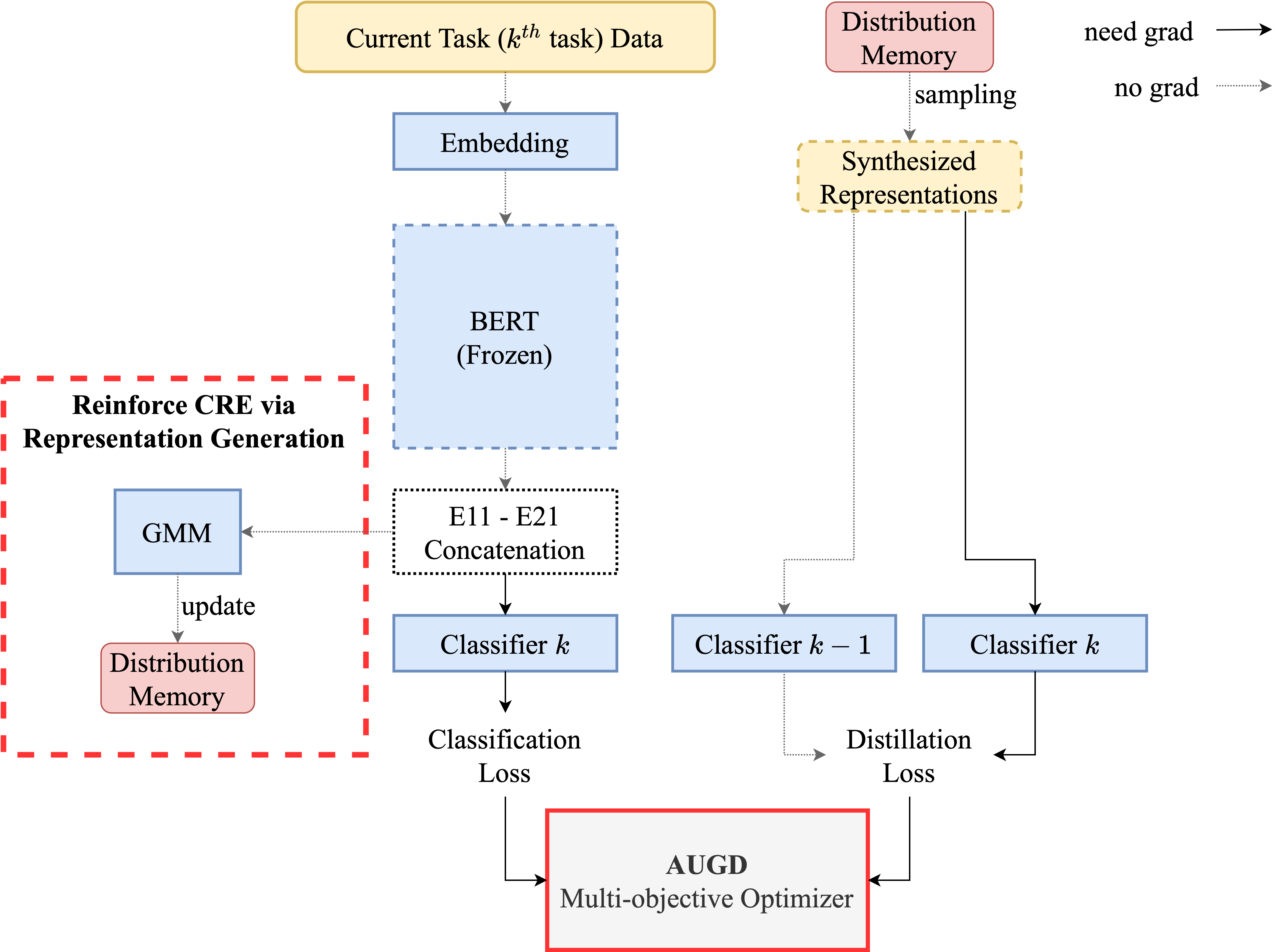

Figure 1: Overview of CREST

In Natural Language Processing, Relation Extraction is the task of classifying the semantic relationships between entities/events in text into predefined relation types. Conventional relation extraction, however, struggles in dynamic environments where the set of relations keeps expanding. This has prompted the development of Continual Relation Extraction (CRE) models, which account for the ever-changing nature of information in practical settings. In this paper, we present a novel CRE approach named CREST.

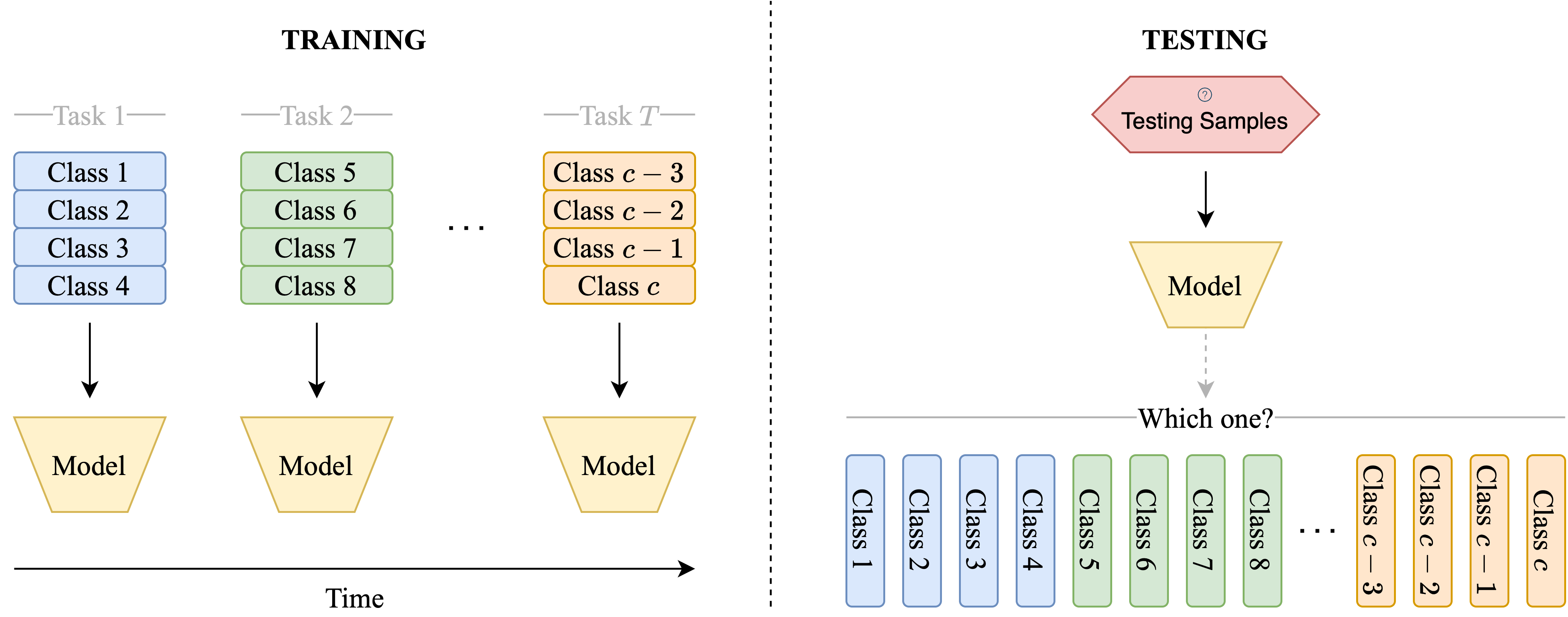

Figure 2: Training and testing setting of CRE

Continual Relation Extraction involves training a model, sequentially, on a series of tasks, each with its own, non-overlapping training set and corresponding relation set. For ease of understanding, each task can be perceived as a conventional relation extraction problem. The aim of Continual Relation Extraction is to develop a model capable of acquiring knowledge from new tasks while maintaining its competence in previously encountered tasks.
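The setting described above can be sketched in a few lines of Python. This is only an illustration of the protocol, not of any model: the relation labels and the helper names `split_into_tasks` and `evaluation_schedule` are hypothetical, and the sketch assumes that after each task the model is tested on all relations observed so far.

```python
from typing import Dict, List, Sequence


def split_into_tasks(relations: Sequence[str], per_task: int) -> List[List[str]]:
    """Partition relation labels into consecutive, non-overlapping tasks."""
    return [list(relations[i:i + per_task]) for i in range(0, len(relations), per_task)]


def evaluation_schedule(tasks: List[List[str]]) -> List[Dict[str, List[str]]]:
    """For each stage, record the relations trained on and the relations tested on."""
    seen: List[str] = []
    schedule = []
    for task_relations in tasks:
        seen = seen + task_relations  # relations observed up to and including this task
        schedule.append({"train": task_relations, "test": list(seen)})
    return schedule


# Hypothetical relation labels, for illustration only.
relations = ["founded_by", "born_in", "capital_of", "spouse", "author_of", "member_of"]
schedule = evaluation_schedule(split_into_tasks(relations, per_task=2))
for stage, step in enumerate(schedule, start=1):
    print(f"task {stage}: train on {step['train']}, test on {len(step['test'])} relations")
```

The training set shrinks to the current task's relations at every stage while the test set keeps growing, which is what makes forgetting visible in the reported numbers.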

Previous CRE approaches [1, 2, 3] have achieved impressive results using memory-based techniques. These methods retain a fraction of learned data in a small memory buffer, allowing the model to reinforce its past knowledge while learning new relations. Despite their success, state-of-the-art CRE methods still suffer from two lingering issues:

The first issue is their reliance on a memory buffer (Issue No. 1). In light of the diverse potential applications of CRE, many of which might involve highly confidential data, there are significant concerns regarding storing data in the long term while maintaining stringent privacy standards.

Another problem with such methods is the handling of multiple objectives during replay (Issue No. 2). For example, Hu et al.’s [1] method involves InfoNCE contrastive loss [4] and contrastive margin loss, while Zhao et al.’s [2] approach involves supervised contrastive loss and distillation loss. These methods simply aggregate the losses by weighted summation, overlooking the inherent, complicated trade-offs between the objectives.

A notable obstacle in replay-based CRE, and in replay-based Continual Learning in general, arises from the limited size of the replay buffer in contrast to the continuous accumulation of data. Apart from raising privacy concerns, this introduces the risk of the model overfitting to the small memory buffer, which weakens the effectiveness of replay.

To address these issues and diversify memory buffers, generative models prove effective by synthesizing representations for each relation type. Since we keep the BERT encoder frozen during training, which eliminates any changes to the representations after each updating step of the model, we can directly fit the generative model to the relation representations of all the data. This can be much more practical and feasible than fitting the model to the original embedding matrices of the text instances.

After training the model on task $T_j$, for each relation type $r$ of that task, we use a Gaussian Mixture Model (GMM) to learn the underlying data distribution of the relation representations corresponding to the data from that specific label and store this distribution for future sampling. In the next task ($T_{j+1}$), for each previously learned relation type $r$, we use its corresponding learnt distribution to sample synthetic relation representations $\tilde{z}$:

$$\tilde{z} \sim \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mu_k, \Sigma_k)$$

where $K$ is the number of GMM components; $\pi_k$, $\mu_k$ and $\Sigma_k$ are the mixing coefficient, mean and diagonal covariance of the $k$-th Gaussian distribution, respectively.
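To make the sampling step concrete, here is a minimal sketch of drawing synthetic representations from a stored GMM with diagonal covariances. It covers sampling only: the fitting step is omitted (one option would be an off-the-shelf EM implementation such as scikit-learn's `GaussianMixture` with `covariance_type="diag"`). The function name `sample_diag_gmm`, the relation label and all parameter values are illustrative assumptions, not taken from the paper.

```python
import math
import random
from typing import List, Sequence


def sample_diag_gmm(
    weights: Sequence[float],
    means: Sequence[Sequence[float]],
    variances: Sequence[Sequence[float]],
    n_samples: int,
    rng: random.Random,
) -> List[List[float]]:
    """Draw samples from a GMM whose components have diagonal covariance."""
    samples = []
    for _ in range(n_samples):
        # Pick a component with probability equal to its mixing coefficient.
        k = rng.choices(range(len(weights)), weights=weights, k=1)[0]
        # With a diagonal covariance, each dimension is an independent Gaussian.
        samples.append(
            [rng.gauss(m, math.sqrt(v)) for m, v in zip(means[k], variances[k])]
        )
    return samples


# Toy parameters for one hypothetical relation type (not fitted to real data).
stored_gmms = {
    "born_in": {
        "weights": [0.7, 0.3],
        "means": [[0.0, 1.0, -1.0], [4.0, 4.0, 4.0]],
        "variances": [[0.1, 0.1, 0.1], [0.5, 0.5, 0.5]],
    }
}

rng = random.Random(0)
synthetic = {
    relation: sample_diag_gmm(n_samples=5, rng=rng, **params)
    for relation, params in stored_gmms.items()
}
```

Only the mixture parameters are kept per relation type, so no original text instance needs to be stored between tasks.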

The generated set helps the model reinforce its previous knowledge via knowledge distillation. The distillation loss transfers knowledge from previous tasks to the current task, enabling the model to retain previously learned information and avoid catastrophic forgetting.
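The exact form of the distillation loss is not given in this summary. As a rough illustration, one common formulation is the KL divergence between temperature-softened predictions of the previous model (teacher) and the current model (student) on the same synthetic representation. The function names, the temperature value and the logits below are assumptions made for this sketch.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) over temperature-softened class probabilities."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


# Hypothetical logits over three previously learned relation types.
teacher = [2.0, 0.5, -1.0]
unchanged_student = [2.0, 0.5, -1.0]
drifted_student = [0.1, 1.5, 0.3]

loss_unchanged = distillation_loss(teacher, unchanged_student)
loss_drifted = distillation_loss(teacher, drifted_student)
```

A student that still agrees with the teacher incurs no penalty, while one whose predictions on old relations have drifted is pulled back toward the earlier behaviour.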

The training process of our model is a Multi-objective Optimization (MOO) problem, where we have to minimize two objectives simultaneously: the conventional relation extraction loss and the knowledge distillation loss. State-of-the-art Multi-task Learning (MTL) frameworks [5, 6, 7, 8] can be employed to address this problem.

Nevertheless, it is essential to recognize that the current MTL frameworks were not originally developed for continual learning, and there are fundamental differences between the two paradigms. Specifically, at the beginning of training a new task, the objectives associated with maintaining performance on previously learned tasks are already in a better state than the objective related to learning new knowledge; however, current MTL approaches do not have mechanisms to leverage this information since they were not designed to handle such unique challenges encountered in continual learning settings.

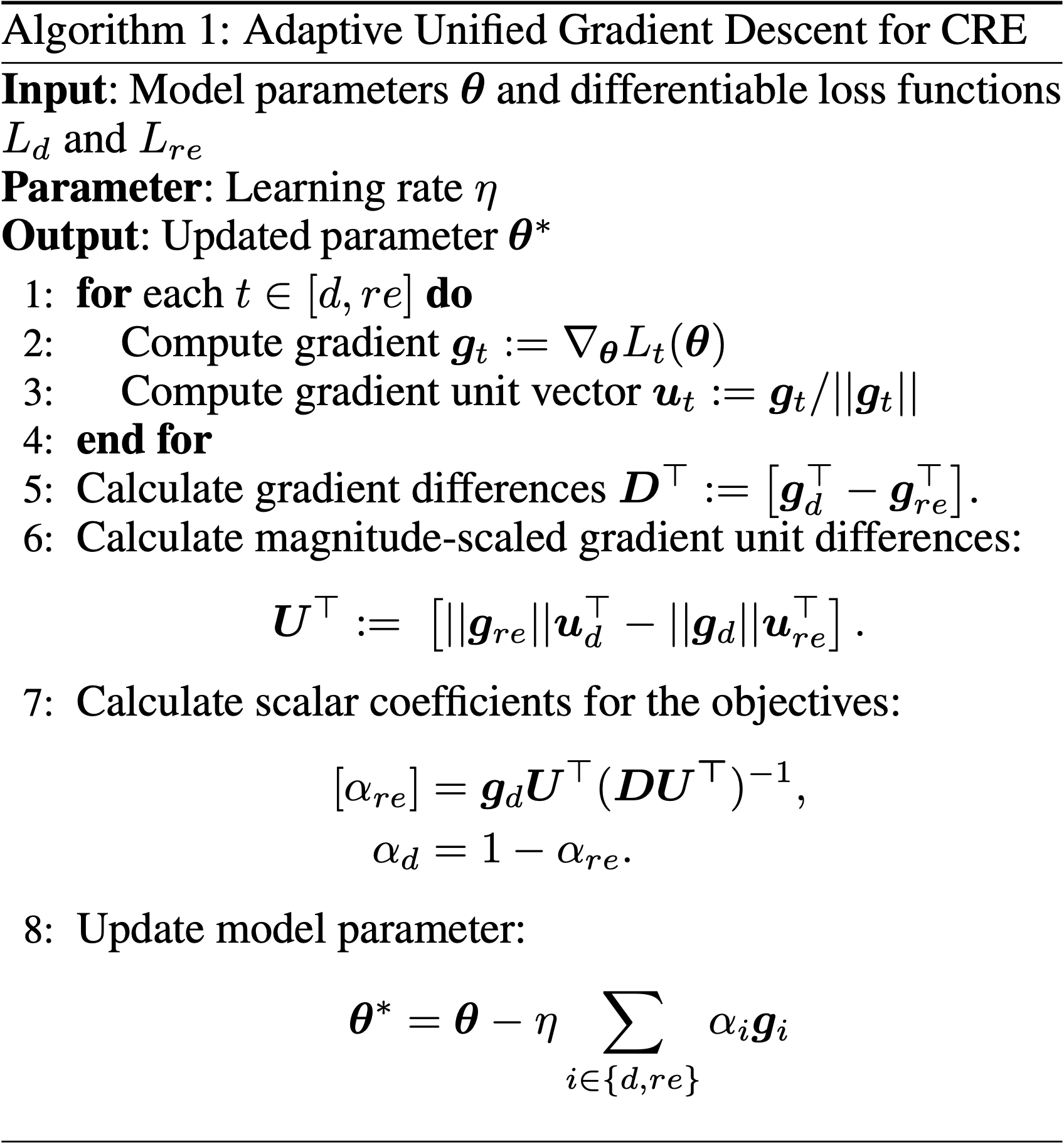

To solve this issue, we propose a novel gradient-based MOO algorithm, Adaptive Unified Gradient Descent, which allows the learning process of the model to recognize the difference in magnitudes of different gradient signals, thereby prioritizing acquiring new knowledge.
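The sketch below does not reproduce Adaptive Unified Gradient Descent. It only illustrates, with made-up numbers, the kind of magnitude gap between gradient signals that the paragraph refers to: one large gradient from the new-task objective and one small gradient from an objective that is already close to a good state. All values and helper names are hypothetical.

```python
import math
from typing import List, Sequence


def norm(v: Sequence[float]) -> float:
    """Euclidean norm of a gradient vector."""
    return math.sqrt(sum(x * x for x in v))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two gradient vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (norm(a) * norm(b))


def weighted_sum(grads: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Plain weighted summation of per-objective gradients."""
    dim = len(grads[0])
    return [sum(w * g[i] for g, w in zip(grads, weights)) for i in range(dim)]


# Made-up gradients: new-task loss (large) vs. distillation loss (small).
grad_new = [3.0, 4.0]
grad_old = [0.06, -0.08]

magnitude_ratio = norm(grad_new) / norm(grad_old)
combined = weighted_sum([grad_new, grad_old], [1.0, 1.0])

print(f"magnitude ratio (new / old): {magnitude_ratio:.1f}")
print(f"cosine(combined, new): {cosine(combined, grad_new):.4f}")
print(f"cosine(combined, old): {cosine(combined, grad_old):.4f}")
```

With equal weights, the combined update is almost entirely determined by the larger gradient. An algorithm that inspects the magnitudes can decide how to use that gap deliberately instead of inheriting it from a fixed weighting.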

Algorithm 1: Adaptive Unified Gradient Descent

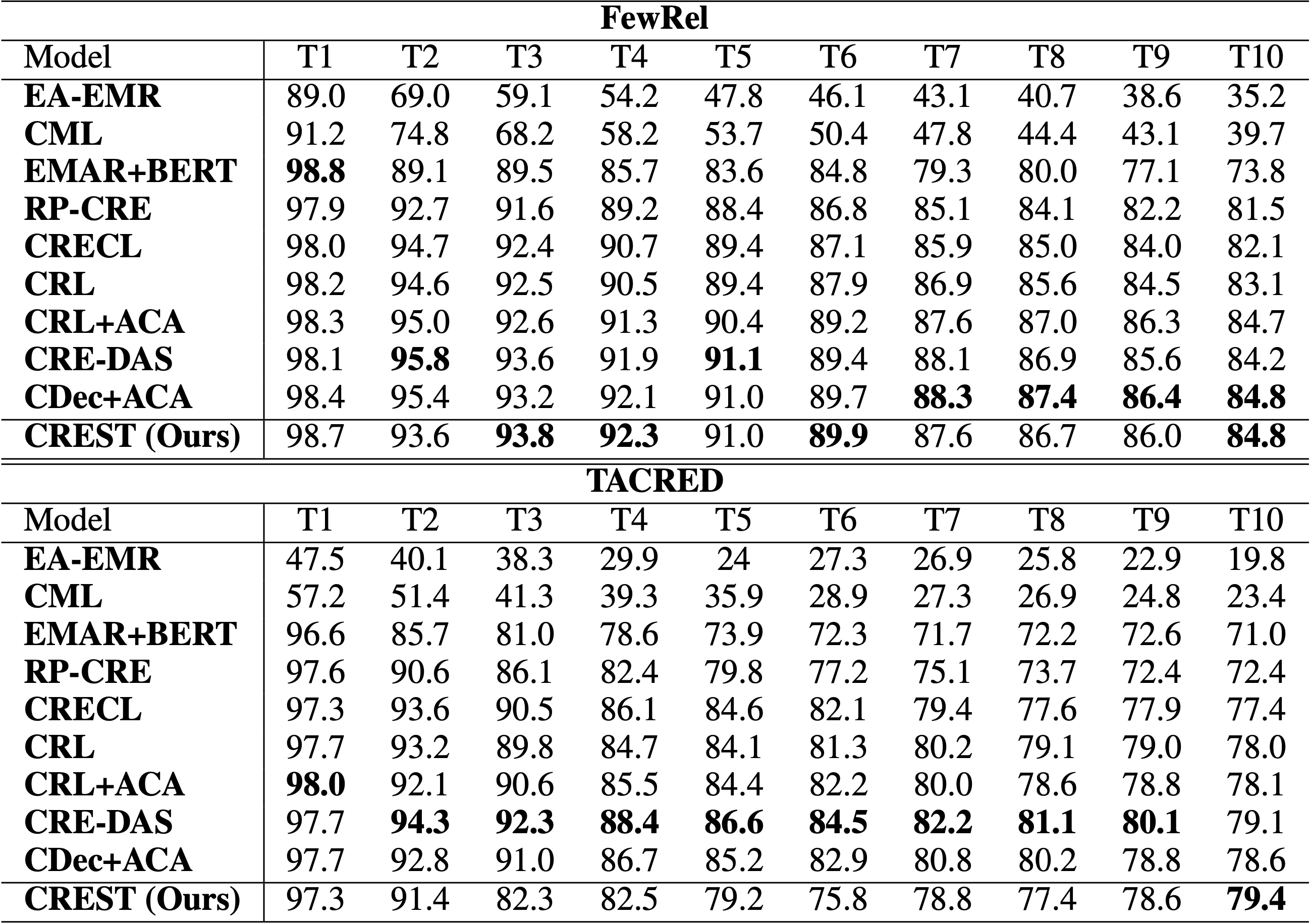

How our method compares to the current most successful CRE baselines:

Table 1: Performance of CREST (%) on all observed relations at each stage of learning, in comparison with SOTA CRE baselines.

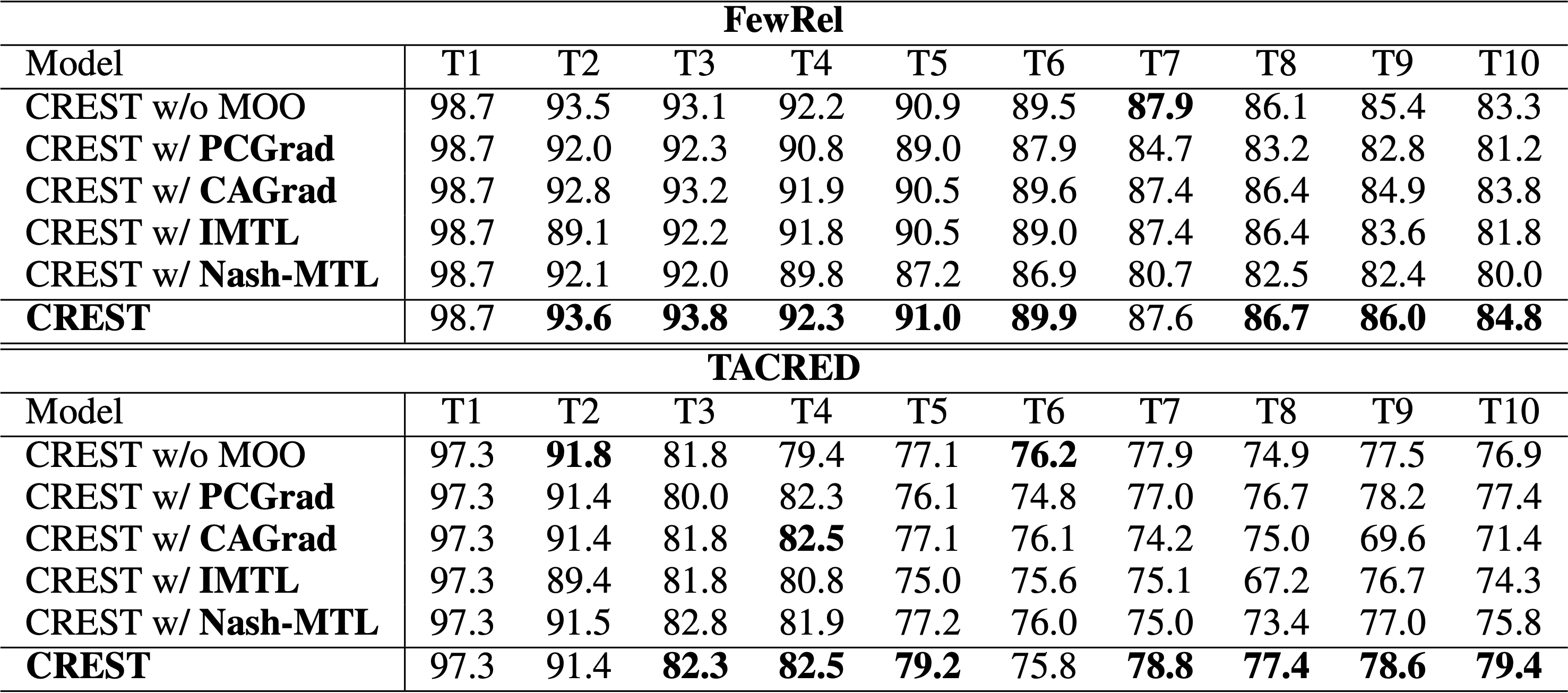

To examine the effectiveness of our novel gradient-based MOO method designed for Continual Learning (CL), we benchmarked it against other SOTA MOO methods [5, 6, 7, 8]. The empirical results are presented in Table 2.

Table 2: Results of ablation studies on different MOO methods when applied to CREST.

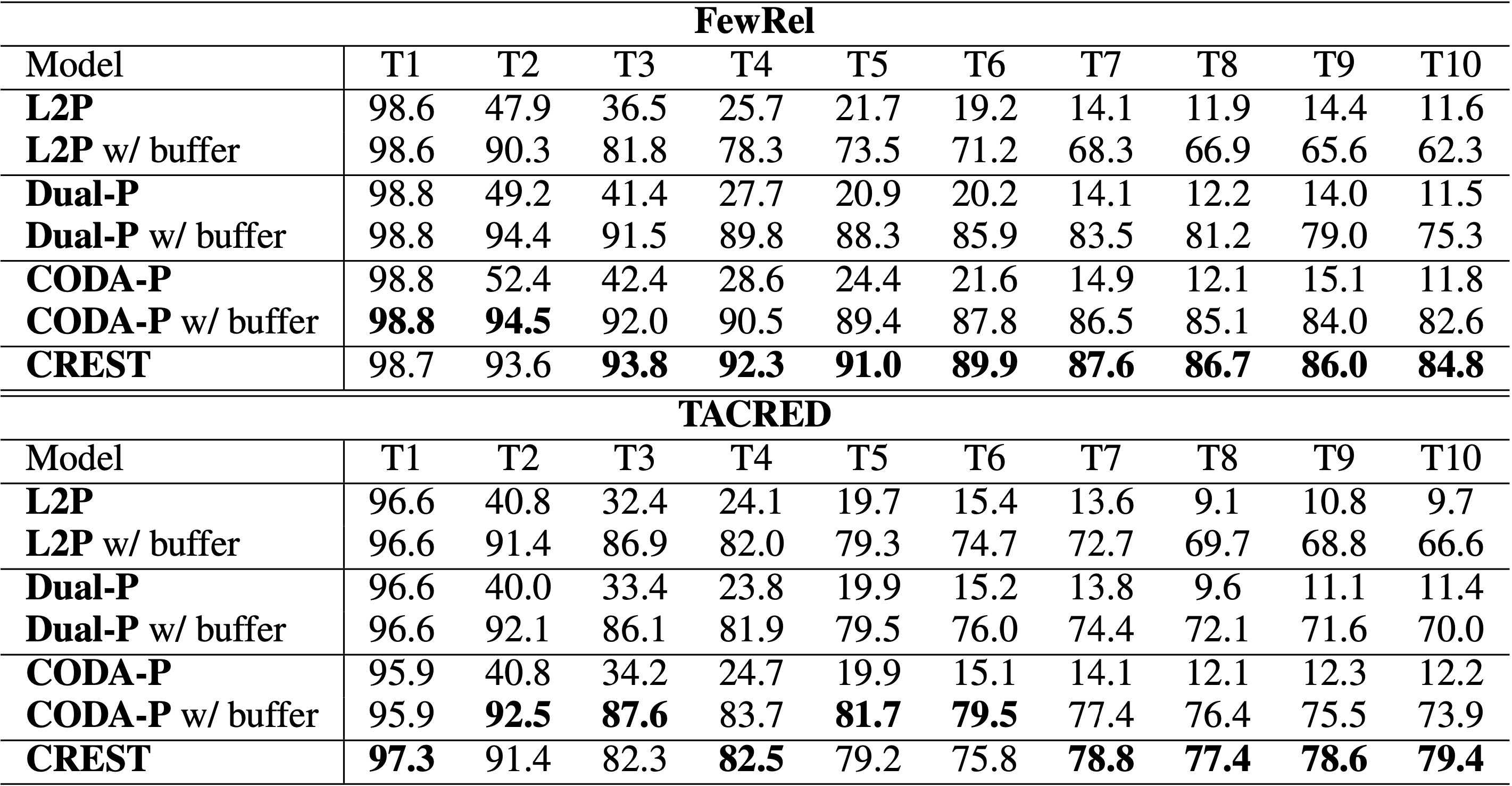

Recently, prompt-based methods for Continual Learning (CL) [9, 10, 11] have emerged as rehearsal-free approaches with efficient finetuning, gaining attention due to their remarkable success in computer vision tasks, even surpassing SOTA memory-based methods. Like CREST, these prompt-based methods neither require full finetuning of the backbone encoder nor rely on an explicit memory buffer. It is therefore essential to compare CREST with these prompt-based continual learning methods. So, how do we stack up against them?

Table 3: Comparison of our method’s performance (%) with state-of-the-art rehearsal-free Continual Learning baselines.

Overall

Thanh-Thien Le*, Manh Nguyen*, Tung Thanh Nguyen*, Linh Ngo Van and Thien Huu Nguyen
